
In Python, caching lets you store the results of expensive function calls and reuse them when the function is called again with the same arguments. For functions whose output depends only on their inputs, this can significantly improve your code's performance.

Python offers built-in support for caching through the functools module, specifically the @cache and @lru_cache decorators. This tutorial shows you how to use both to cache function calls effectively.

Why is Caching Useful?

Here are a few reasons why caching function calls is beneficial:

- Performance Improvement: When a function is called multiple times with the same arguments, caching the result can eliminate redundant calculations. Instead of recalculating the result each time, the cached value can be returned, speeding up execution.

- Reduced Resource Usage: Some function calls are computationally intensive or require significant resources (such as database queries or network requests). Caching their results reduces the need to repeat these operations.

- Enhanced Responsiveness: In applications where responsiveness is crucial, such as web servers or GUI applications, caching can help reduce latency by avoiding repeated calculations or I/O operations.
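As a small illustration of the last two points, the sketch below caches a simulated slow lookup. The function `fetch_user_name` is hypothetical: it stands in for a database query or network request, and `time.sleep` fakes the latency.

```python
import time
from functools import cache

lookups = 0  # counts how many times the slow body actually runs

@cache
def fetch_user_name(user_id):
    """Hypothetical stand-in for a slow database query or network request."""
    global lookups
    lookups += 1
    time.sleep(0.1)  # simulate I/O latency
    return f"user-{user_id}"

start = time.perf_counter()
for _ in range(5):
    name = fetch_user_name(42)
elapsed = time.perf_counter() - start

print(name)                      # user-42
print(lookups)                   # 1: only the first call paid the simulated latency
print(f"{elapsed:.2f} seconds")  # roughly 0.1 seconds rather than 0.5
```

Keep in mind that a cached result never changes: if the underlying data changes, the function keeps returning the old value until you call `fetch_user_name.cache_clear()`.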

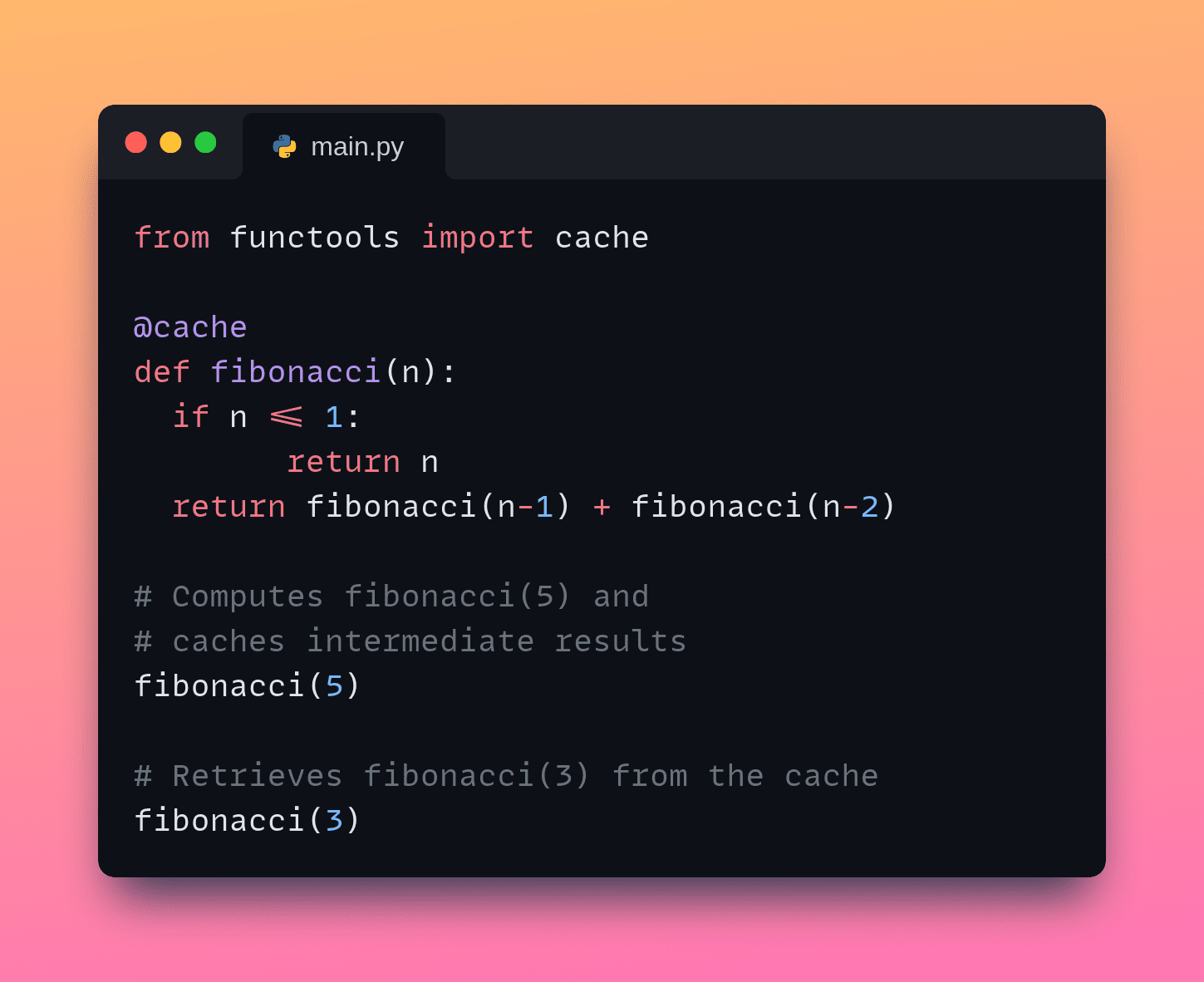

Caching with the @cache Decorator

Let’s code a function that computes the n-th Fibonacci number. Here is the recursive implementation of the Fibonacci sequence:

```python
def fibonacci(n):
    if n <= 1:
        return n
    return fibonacci(n-1) + fibonacci(n-2)
```

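Without caching, the recursion recomputes the same values over and over: fibonacci(5), for example, computes fibonacci(2) three separate times, and the total number of calls grows exponentially with n. It is far more efficient to compute each value once, store it, and look it up on later calls. For this, you can use the @cache decorator.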
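The redundancy is easy to measure. This sketch instruments the recursive function with a `collections.Counter` (the `calls` counter is an addition for illustration only) to record how often each argument is evaluated:

```python
from collections import Counter

calls = Counter()  # maps each argument n to the number of times fibonacci(n) runs

def fibonacci(n):
    calls[n] += 1
    if n <= 1:
        return n
    return fibonacci(n-1) + fibonacci(n-2)

print(fibonacci(5))           # 5
print(sorted(calls.items()))  # [(0, 3), (1, 5), (2, 3), (3, 2), (4, 1), (5, 1)]
print(sum(calls.values()))    # 15 calls in total to compute fibonacci(5)
```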

The @cache decorator, available in the functools module since Python 3.9, stores the result of each call keyed by its arguments and returns the stored result whenever the function is called again with the same arguments. It is equivalent to @lru_cache(maxsize=None), so the cache has no size limit. Now, let's wrap the function with the @cache decorator:

```python
from functools import cache

@cache
def fibonacci(n):
    if n <= 1:
        return n
    return fibonacci(n-1) + fibonacci(n-2)
```
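To see the cache at work, you can inspect it with the `cache_info()` method that functools attaches to the decorated function. In this sketch, each of fibonacci(0) through fibonacci(30) is computed exactly once (31 misses), and the second recursive call in fibonacci(3) through fibonacci(30) is served from the cache (28 hits):

```python
from functools import cache

@cache
def fibonacci(n):
    if n <= 1:
        return n
    return fibonacci(n-1) + fibonacci(n-2)

print(fibonacci(30))           # 832040
print(fibonacci.cache_info())  # CacheInfo(hits=28, misses=31, maxsize=None, currsize=31)
```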

We will revisit performance comparisons later. Now, let’s look at another way to cache function return values using the @lru_cache decorator.

Caching with the @lru_cache Decorator

You can also use the functools.lru_cache decorator for caching. It applies the Least Recently Used (LRU) strategy to function calls: when the cache is full and a new result needs to be added, the entry that has gone the longest without being accessed is removed to make room. This keeps the most recently used results in the cache and discards the ones that have not been used for the longest time.
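The eviction policy itself can be sketched in a few lines with `collections.OrderedDict`. The `LRUCache` class below is a hypothetical illustration of the strategy, not how functools implements `lru_cache` internally:

```python
from collections import OrderedDict

class LRUCache:
    """Minimal illustrative LRU cache (not the functools implementation)."""

    def __init__(self, maxsize):
        self.maxsize = maxsize
        self._data = OrderedDict()  # ordered from least to most recently used

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.maxsize:
            self._data.popitem(last=False)  # evict the least recently used entry

lru = LRUCache(maxsize=2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")           # "a" becomes the most recently used key
lru.put("c", 3)        # over capacity, so "b" (least recently used) is evicted
print(list(lru._data))  # ['a', 'c']
```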

The @lru_cache decorator is similar to @cache but allows you to specify the maximum size of the cache using the maxsize argument. Once the cache reaches this size, the least recently used items are removed. This is useful if you want to limit memory usage.
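Here is a small sketch of that eviction with a deliberately tiny cache. The `square` function and the `computed` list are hypothetical; the list records every argument whose body actually runs:

```python
from functools import lru_cache

computed = []  # records each argument for which the function body runs

@lru_cache(maxsize=2)
def square(x):
    computed.append(x)
    return x * x

square(1)  # miss: cache holds 1
square(2)  # miss: cache holds 1 and 2
square(1)  # hit: 1 becomes the most recently used entry
square(3)  # miss: cache is full, so 2 (least recently used) is evicted
square(2)  # miss again: 2 must be recomputed, and 1 is evicted

print(computed)             # [1, 2, 3, 2]
print(square.cache_info())  # CacheInfo(hits=1, misses=4, maxsize=2, currsize=2)
```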

In the following example, the fibonacci function caches up to the seven most recently used results:

```python
from functools import lru_cache

@lru_cache(maxsize=7)  # Cache up to 7 most recent results
def fibonacci(n):
    if n <= 1:
        return n
    return fibonacci(n-1) + fibonacci(n-2)

fibonacci(5)  # Computes Fibonacci(5) and caches intermediate results
fibonacci(3)  # Retrieves Fibonacci(3) from the cache
```

The maxsize=7 argument caps the cache at seven entries; once it is full, the least recently used entry is evicted to make room for a new one.

When fibonacci(5) is called, the results for fibonacci(0) through fibonacci(5) are all cached: six entries, which fits within the limit of seven. When fibonacci(3) is called afterward, its result is retrieved directly from the cache, avoiding any recomputation.
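You can confirm this behavior with `cache_info()`. In this sketch, the first call records six misses (one per value from 0 to 5), and the later fibonacci(3) call adds one hit without any new misses:

```python
from functools import lru_cache

@lru_cache(maxsize=7)
def fibonacci(n):
    if n <= 1:
        return n
    return fibonacci(n-1) + fibonacci(n-2)

fibonacci(5)
print(fibonacci.cache_info())  # CacheInfo(hits=3, misses=6, maxsize=7, currsize=6)

fibonacci(3)
print(fibonacci.cache_info())  # CacheInfo(hits=4, misses=6, maxsize=7, currsize=6)
```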

Timing Function Calls for Comparison

Let's compare execution times with and without caching. In this example, @lru_cache is applied without arguments, so maxsize takes its default value of 128:

```python
from functools import cache, lru_cache
import timeit

# without caching
def fibonacci_no_cache(n):
    if n <= 1:
        return n
    return fibonacci_no_cache(n-1) + fibonacci_no_cache(n-2)

# with cache
@cache
def fibonacci_cache(n):
    if n <= 1:
        return n
    return fibonacci_cache(n-1) + fibonacci_cache(n-2)

# with LRU cache
@lru_cache
def fibonacci_lru_cache(n):
    if n <= 1:
        return n
    return fibonacci_lru_cache(n-1) + fibonacci_lru_cache(n-2)
```
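If you want to double-check the cache limits before timing anything, Python 3.9+ also provides a `cache_parameters()` method on decorated functions. This standalone sketch confirms that @cache is unbounded and that a bare @lru_cache defaults to 128 entries:

```python
from functools import cache, lru_cache

@cache
def fibonacci_cache(n):
    if n <= 1:
        return n
    return fibonacci_cache(n-1) + fibonacci_cache(n-2)

@lru_cache
def fibonacci_lru_cache(n):
    if n <= 1:
        return n
    return fibonacci_lru_cache(n-1) + fibonacci_lru_cache(n-2)

print(fibonacci_cache.cache_parameters()["maxsize"])      # None (no limit)
print(fibonacci_lru_cache.cache_parameters()["maxsize"])  # 128
```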

To compare execution times, we will use the timeit function from the timeit module:

```python
# Compute the n-th Fibonacci number
n = 35

no_cache_time = timeit.timeit(lambda: fibonacci_no_cache(n), number=1)
cache_time = timeit.timeit(lambda: fibonacci_cache(n), number=1)
lru_cache_time = timeit.timeit(lambda: fibonacci_lru_cache(n), number=1)

print(f"Time without cache: {no_cache_time:.6f} seconds")
print(f"Time with cache: {cache_time:.6f} seconds")
print(f"Time with LRU cache: {lru_cache_time:.6f} seconds")
```

Running the above code should produce output similar to the following (exact timings vary by machine):

```
Time without cache: 2.373220 seconds
Time with cache: 0.000029 seconds
Time with LRU cache: 0.000017 seconds
```

There is a dramatic difference in execution times. The uncached version makes an exponential number of recursive calls (nearly 30 million for n = 35), so its running time grows rapidly as n increases. The cached versions (both @cache and @lru_cache) compute each value from fibonacci(0) to fibonacci(35) only once, so they finish almost instantly and have comparable execution times.
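Counting calls makes the gap concrete without relying on timings. This sketch uses a smaller n = 20 so it runs quickly; the `naive_calls` counter is an addition for illustration. The naive version makes 21,891 calls, while the cached version runs its body only 21 times (once per value from 0 to 20):

```python
from functools import cache

naive_calls = 0  # counts every invocation of the uncached function

def fibonacci_no_cache(n):
    global naive_calls
    naive_calls += 1
    if n <= 1:
        return n
    return fibonacci_no_cache(n-1) + fibonacci_no_cache(n-2)

@cache
def fibonacci_cache(n):
    if n <= 1:
        return n
    return fibonacci_cache(n-1) + fibonacci_cache(n-2)

n = 20
print(fibonacci_no_cache(n), fibonacci_cache(n))  # 6765 6765
print(naive_calls)                                # 21891
print(fibonacci_cache.cache_info().misses)        # 21
```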

Wrapping Up

By using the @cache and @lru_cache decorators, you can significantly speed up the execution of functions that involve expensive calculations or recursive calls. You can find the complete code on GitHub.


Bala Priya C is an Indian developer and technical writer. She enjoys working at the intersection of mathematics, programming, data science, and content creation. Her areas of interest and expertise include DevOps, data science, and natural language processing. She loves reading, writing, coding, and drinking coffee! Currently, she is focused on learning and sharing her knowledge with the developer community by creating tutorials, how-to guides, opinion pieces, and more. Bala also creates engaging resource roundups and coding tutorials.