Decision Trees for the Real World

Introduction

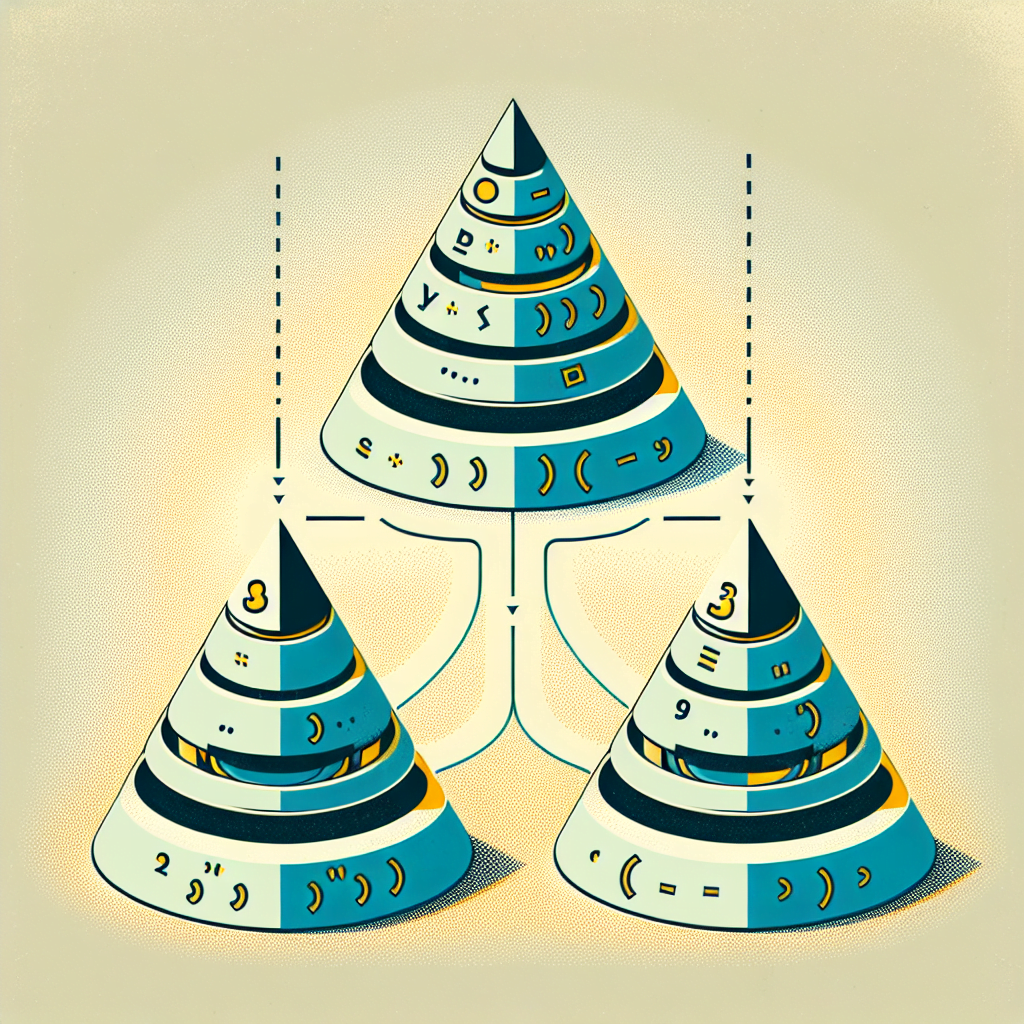

Image by author

Decision trees break down complex decisions into simple, sequential steps, mimicking human decision-making processes. In data science, these powerful tools are widely used to facilitate data analysis and guide decision-making. This article will explore how decision trees work, provide real-world examples, and offer tips for improving them.

Structure of Decision Trees

At their core, decision trees are straightforward and intuitive. Let's look at the main components of a decision tree.

Nodes, Branches, and Leaves

A decision tree is built from three basic components: nodes, branches, and leaves. Each plays a crucial role in the decision-making process.

- Nodes: These are decision points where the tree tests the input data (for example, the value of a feature) to decide which branch to follow. The root node is the starting point and represents the entire dataset.

- Branches: These represent the outcomes of decisions and connect nodes. Each branch corresponds to a potential outcome or value of a decision node.

- Leaves: The endpoints of the decision tree are leaves, also known as leaf nodes. Each leaf node represents a specific outcome or label, reflecting the final decision or classification.

Conceptual Example

Imagine deciding what to take with you when you go outside, based on the weather. The root node might ask, "Is it raining?" If yes, a branch might lead to "Take an umbrella." If no, another branch might suggest "Wear sunglasses." This structure makes decision trees easy to interpret and visualize, which is a big part of why they are popular in so many fields.
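Nodes, branches, and leaves map directly onto a small data structure. Here is a minimal Python sketch of the weather example; the class names `Leaf` and `DecisionNode` and the `decide` function are our own illustrative choices, not part of any library.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Leaf:
    """A leaf node: holds the final decision."""
    decision: str


@dataclass
class DecisionNode:
    """An internal node: asks a yes/no question and routes to one of two branches."""
    question: str
    test: Callable[[dict], bool]
    if_yes: "Tree"
    if_no: "Tree"


Tree = Union[Leaf, DecisionNode]


def decide(node: Tree, observation: dict) -> str:
    """Follow branches from the root until a leaf is reached."""
    while isinstance(node, DecisionNode):
        node = node.if_yes if node.test(observation) else node.if_no
    return node.decision


# Root node of the weather example: one question, two branches, two leaves
weather_tree = DecisionNode(
    question="Is it raining?",
    test=lambda obs: obs["raining"],
    if_yes=Leaf("Take an umbrella"),
    if_no=Leaf("Wear sunglasses"),
)

print(decide(weather_tree, {"raining": True}))   # Take an umbrella
print(decide(weather_tree, {"raining": False}))  # Wear sunglasses
```

Deeper trees are built the same way: a branch simply points to another `DecisionNode` instead of a `Leaf`.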

Real-World Example: Loan Approval Adventure

Consider this scenario: you’re a wizard at Gringotts Bank, deciding who gets a loan for a new broomstick.

- Root Node: "Is their credit score magical?"
  - If yes → Branch to "Approve faster than you can say Quidditch!"
  - If no → Branch to "Check their goblin gold reserves."
    - If high → "Approve, but keep an eye on them."
    - If low → "Deny faster than a Nimbus 2000."
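Written out by hand, the branching above is just a pair of nested conditionals. This is a minimal sketch, not a trained model: the cut-offs `MAGICAL_CREDIT_SCORE` and `HIGH_GOLD_RESERVES`, and the gold-reserves input itself, are hypothetical values we chose because the scenario does not define "magical" or "high."

```python
# Hypothetical cut-offs: the scenario does not say what counts as "magical" or "high"
MAGICAL_CREDIT_SCORE = 700
HIGH_GOLD_RESERVES = 1_000  # galleons, also hypothetical


def loan_decision(credit_score: int, gold_reserves: int) -> str:
    # Root node: is the credit score magical?
    if credit_score >= MAGICAL_CREDIT_SCORE:
        return "Approve"
    # Second decision node, reached only on the "no" branch
    if gold_reserves >= HIGH_GOLD_RESERVES:
        return "Approve with monitoring"
    return "Deny"


for applicant in [(720, 50), (650, 5_000), (600, 10)]:
    print(applicant, "->", loan_decision(*applicant))
```

A decision tree learner produces the same kind of nested structure, but it picks the questions and thresholds from data instead of having them written by hand.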

```python
import pandas as pd
import matplotlib.pyplot as plt
from sklearn import tree
from sklearn.tree import DecisionTreeClassifier

# Toy loan data: credit score, income, and the approval decision
data = {
    'Credit_Score': [700, 650, 600, 580, 720],
    'Income': [50000, 45000, 40000, 38000, 52000],
    'Approved': ['Yes', 'No', 'No', 'No', 'Yes']
}

df = pd.DataFrame(data)

X = df[['Credit_Score', 'Income']]
y = df['Approved']

# Fit the tree; random_state makes the choice between equally good splits reproducible
clf = DecisionTreeClassifier(random_state=42)
clf.fit(X, y)

# Plot the fitted tree; clf.classes_ lists the labels in the order the model uses internally
plt.figure(figsize=(10, 8))
tree.plot_tree(clf, feature_names=['Credit_Score', 'Income'], class_names=list(clf.classes_), filled=True)
plt.show()
```

When you run this code, a plot of the fitted decision tree appears, showing how decisions are made based on credit score and income.

Decision Trees: Behind the Branches

Decision trees work much like a flowchart: a series of decision points, each sending the data down one branch or another. A tree is built by repeatedly splitting the dataset into smaller subsets and adding a decision node at each split. Let's explore how these splits are chosen and how trees handle different types of variables.

Splitting Criteria: Gini Impurity and Information Gain

The key step in building a decision tree is choosing the best feature (and threshold) to split the data on at each node. Two common criteria for making this choice are Gini impurity and information gain.

- Gini Impurity: Measures how often a randomly chosen sample would be mislabeled if it were labeled at random according to the label distribution in the node. Lower Gini impurity means purer nodes and a happier tree.

- Information Gain: Measures how much splitting on an attribute reduces entropy, that is, the uncertainty about the label. Higher information gain means a more informative split.
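To make the two criteria concrete, here is a small pure-Python sketch using their standard definitions: Gini impurity is 1 - sum(p_k^2), entropy is -sum(p_k * log2(p_k)), and information gain is the parent's entropy minus the size-weighted entropy of its children, where p_k is the share of class k in a node. The labels come from the loan example above; the split on `Credit_Score >= 700` is a threshold we picked for illustration.

```python
from collections import Counter
from math import log2


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def entropy(labels):
    """Shannon entropy in bits: -sum(p * log2(p)) over class proportions."""
    n = len(labels)
    return -sum((count / n) * log2(count / n) for count in Counter(labels).values())


def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child nodes."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


# Approval labels from the loan example, split on Credit_Score >= 700
parent = ['Yes', 'No', 'No', 'No', 'Yes']
left = ['Yes', 'Yes']          # Credit_Score >= 700
right = ['No', 'No', 'No']     # Credit_Score < 700

print(round(gini(parent), 3))                              # 0.48
print(round(entropy(parent), 3))                           # 0.971
print(round(information_gain(parent, [left, right]), 3))   # 0.971
```

Because both children are pure (entropy 0), this split recovers all of the parent's entropy, which is the best any split can do.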

Handling Continuous and Categorical Data

Decision trees can handle both continuous and categorical data. For continuous features like age or income, the tree picks a threshold: "All those over 30, this way!" For categorical features like gender or product type, it sorts by category: "Smartphones to the left, laptops to the right!"
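The threshold idea can be sketched in a few lines of Python. For a continuous feature, the hypothetical helper `best_threshold` below tries the midpoint between each pair of consecutive distinct values and keeps the one with the lowest weighted Gini impurity. scikit-learn's trees also place thresholds between observed values, but this helper is our own illustration, not library code. The ages and labels are taken from the customer purchase example below. For categorical features, the scikit-learn tree used in this article works on numeric arrays, so a common approach is to one-hot encode them first, shown here with `pandas.get_dummies` on a made-up `product_type` column.

```python
import pandas as pd


def weighted_gini(left, right):
    """Size-weighted Gini impurity of a two-way split."""
    def gini(labels):
        n = len(labels)
        if n == 0:
            return 0.0
        return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

    n = len(left) + len(right)
    return len(left) / n * gini(left) + len(right) / n * gini(right)


def best_threshold(values, labels):
    """Try midpoints between consecutive distinct values; return (threshold, impurity)."""
    distinct = sorted(set(values))
    best = (None, float("inf"))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(values, labels) if x <= t]
        right = [y for x, y in zip(values, labels) if x > t]
        score = weighted_gini(left, right)
        if score < best[1]:
            best = (t, score)
    return best


# Continuous feature: ages and purchase labels from the customer example
ages = [25, 45, 35, 50, 23]
purchased = ['No', 'Yes', 'No', 'Yes', 'No']
print(best_threshold(ages, purchased))  # (40.0, 0.0)

# Categorical feature: one-hot encode so each category becomes its own 0/1 column
products = pd.DataFrame({'product_type': ['smartphone', 'laptop', 'smartphone', 'tablet']})
print(pd.get_dummies(products, columns=['product_type']).columns.tolist())
```

Here "Age <= 40" separates buyers from non-buyers perfectly, so its weighted impurity is 0. After one-hot encoding, a split such as "product_type_laptop <= 0.5" plays the role of "laptops to one side, everything else to the other."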

Concrete Example: Customer Purchase Predictor

To better understand how decision trees work, let’s look at an example: using a customer’s age and income to predict if they will buy a product.

```python
import pandas as pd
import matplotlib.pyplot as plt
from sklearn import tree
from sklearn.tree import DecisionTreeClassifier

# Toy customer data: age, income, and whether the customer bought the product
data = {
    'Age': [25, 45, 35, 50, 23],
    'Income': [50000, 100000, 75000, 120000, 60000],
    'Purchased': ['No', 'Yes', 'No', 'Yes', 'No']
}

df = pd.DataFrame(data)

X = df[['Age', 'Income']]
y = df['Purchased']

# Fit the tree; random_state makes the choice between equally good splits reproducible
clf = DecisionTreeClassifier(random_state=42)
clf.fit(X, y)

# Plot the fitted tree; clf.classes_ lists the labels in the order the model uses internally
plt.figure(figsize=(10, 8))
tree.plot_tree(clf, feature_names=['Age', 'Income'], class_names=list(clf.classes_), filled=True)
plt.show()
```

The plot shows how the tree splits on age and income to decide whether a customer is likely to buy the product. Each internal node is a decision point, the branches show the possible outcomes, and the leaf nodes hold the final decision.

Real-World Applications

This project is a take-home assignment used in hiring for data science positions at Meta (Facebook). The goal is to build a classification model that predicts whether a movie on Rotten Tomatoes is labeled "Rotten," "Fresh," or "Certified Fresh."

Here's the link to the project: [Rotten Tomatoes Movies Rating Prediction](https://platform.stratascratch.com/data-projects/rotten-tomatoes-movies-rating-prediction?utm_source=blog&utm_medium=click&utm_campaign=kdn+decision+trees+for+real+world)

Step-by-Step Solution

1. Data Preparation: Merge the two datasets on the `rotten_tomatoes_link` column to get a complete dataset with movie information and reviews.
2. Feature Selection and Engineering: Select relevant features and perform the necessary transformations, including converting categorical variables to numerical ones, handling missing values, and normalizing feature values.
3. Model Training: Train a decision tree classifier on the processed dataset and use cross-validation to check that its performance is robust.
4. Evaluation: Finally, evaluate the model's performance using metrics like accuracy, precision, recall, and F1 score.

```python
import pandas as pd
from sklearn.model_selection import train_test_split, cross_val_score
from sklearn.tree import DecisionTreeClassifier
from sklearn.metrics import classification_report
from sklearn.preprocessing import StandardScaler

movies_df = pd.read_csv('rotten_tomatoes_movies.csv')
reviews_df = pd.read_csv('rotten_tomatoes_critic_reviews_50k.csv')

# Join movie metadata with critic reviews (one row per movie-review pair)
merged_df = pd.merge(movies_df, reviews_df, on='rotten_tomatoes_link')

# Note: tomatometer_rating is the score that largely determines tomatometer_status,
# so it is likely to dominate the model and inflate its scores
features = ['content_rating', 'genres', 'directors', 'runtime', 'tomatometer_rating', 'audience_rating']
target = 'tomatometer_status'

# Drop missing values before encoding: .cat.codes would silently turn NaN into -1
merged_df = merged_df.dropna(subset=features + [target]).copy()

# Encode categorical columns as integer codes
for col in ['content_rating', 'genres', 'directors']:
    merged_df[col] = merged_df[col].astype('category').cat.codes

X = merged_df[features]
# Keep the category order so the report's class names line up with the integer codes
target_cat = merged_df[target].astype('category')
y = target_cat.cat.codes
class_names = list(target_cat.cat.categories)

# Scaling does not change decision tree splits; it is kept here to mirror step 2
scaler = StandardScaler()
X_scaled = scaler.fit_transform(X)

X_train, X_test, y_train, y_test = train_test_split(X_scaled, y, test_size=0.3, random_state=42)

# Pre-pruned tree, cross-validated on the training set only
clf = DecisionTreeClassifier(max_depth=10, min_samples_split=10, min_samples_leaf=5, random_state=42)
scores = cross_val_score(clf, X_train, y_train, cv=5)
print("Cross-validation scores:", scores)
print("Average cross-validation score:", scores.mean())

clf.fit(X_train, y_train)
y_pred = clf.predict(X_test)

print(classification_report(y_test, y_pred, target_names=class_names))
```

The model shows high accuracy and F1 scores across all classes, indicating good performance. Let's summarize the key takeaways.

Key Takeaways

1. Feature Selection: Crucial for model performance. Runtime and director ratings were valuable indicators.
2. Decision Tree Classifier: Effectively captures complex relationships in movie data.
3. Cross-Validation: Checks that performance holds across different subsets of the data.
4. Class Imbalance: High performance in the "Certified Fresh" class warrants further investigation.
5. Real-World Application: Promising for predicting movie ratings and enhancing the user experience on platforms like Rotten Tomatoes.

Enhancing Decision Trees: Turning Your Sapling into a Mighty Oak

You've built your first decision tree. Impressive! But why stop there? Let's turn this tree into a forest giant that would make even Groot jealous. Ready to beef up your tree? Let's go!

Pruning Techniques

Pruning reduces the size of a decision tree by removing parts that have little predictive power, which helps reduce overfitting.

- Pre-Pruning: Also known as early stopping, this halts the tree's growth early. Limits such as the maximum depth (max_depth), the minimum number of samples required to split a node (min_samples_split), and the minimum number of samples required at a leaf node (min_samples_leaf) are set before training, which keeps the tree from becoming overly complex.

- Post-Pruning: This method grows the tree to its maximum depth and then removes nodes that add little predictive power. Although it is more computationally intensive than pre-pruning, post-pruning can be more effective.
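Both styles can be tried in scikit-learn. Pre-pruning uses the constructor limits named above; for post-pruning, scikit-learn offers minimal cost-complexity pruning through the `ccp_alpha` parameter, with `cost_complexity_pruning_path` listing the candidate alphas. The sketch below uses scikit-learn's bundled breast cancer dataset and the specific limit values as our own assumptions, not choices from this article, and picks the alpha by 5-fold cross-validation on the training set.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Unpruned baseline: keeps splitting until leaves are pure (or cannot be split further)
full = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

# Pre-pruning: growth limits are fixed before training
pre = DecisionTreeClassifier(
    max_depth=4, min_samples_split=10, min_samples_leaf=5, random_state=0
).fit(X_train, y_train)

# Post-pruning: effective alphas at which subtrees of the full tree get pruned away
path = full.cost_complexity_pruning_path(X_train, y_train)
alphas = path.ccp_alphas[:-1]  # drop the last alpha, which prunes the tree to its root


def cv_accuracy(alpha):
    """Mean 5-fold accuracy on the training set for a given pruning strength."""
    model = DecisionTreeClassifier(ccp_alpha=alpha, random_state=0)
    return cross_val_score(model, X_train, y_train, cv=5).mean()


best_alpha = max(alphas, key=cv_accuracy)
post = DecisionTreeClassifier(ccp_alpha=best_alpha, random_state=0).fit(X_train, y_train)

for name, model in [("unpruned", full), ("pre-pruned", pre), ("post-pruned", post)]:
    print(
        f"{name:12s} leaves={model.get_n_leaves():3d} "
        f"depth={model.get_depth():2d} test accuracy={model.score(X_test, y_test):.3f}"
    )
```

Comparing leaf counts and held-out accuracy across the three trees shows the trade-off directly: the pruned trees are smaller, and whether they generalize better depends on the data.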

Ensemble Methods

Ensemble techniques combine multiple models to produce superior performance compared to any single model. Two main forms of ensemble techniques applied to decision trees are bagging and boosting.

- Bagging (Bootstrap Aggregating): This method trains multiple decision trees on different random subsets of the data (drawn by sampling with replacement) and then combines their predictions, averaging them or taking a majority vote. This reduces variance and helps prevent overfitting. Random Forest, a widely used bagging technique, goes one step further by considering only a random subset of features at each split. Check out "Decision Tree and Random Forest Algorithm Explained" for an in-depth look at the Decision Tree algorithm and its extension, the Random Forest algorithm.

- Boosting: Boosting creates trees sequentially, with each tree trying to correct the errors of the previous one. Boosting techniques include algorithms like AdaBoost and Gradient Boosting. By focusing on hard-to-predict examples, these algorithms often produce more accurate models.
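scikit-learn ships an estimator for each of these ensembles in `sklearn.ensemble`. The sketch below compares them with a single tree using 5-fold cross-validation; the bundled breast cancer dataset and `n_estimators=100` are our own assumptions for illustration, and the resulting scores will depend on the data.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import (
    AdaBoostClassifier,
    BaggingClassifier,
    GradientBoostingClassifier,
    RandomForestClassifier,
)
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

models = {
    "single tree": DecisionTreeClassifier(random_state=0),
    # Bagging: by default each member is a decision tree fit on a bootstrap sample
    "bagging": BaggingClassifier(n_estimators=100, random_state=0),
    # Random forest: bagging plus a random subset of features at each split
    "random forest": RandomForestClassifier(n_estimators=100, random_state=0),
    # AdaBoost: by default, depth-1 trees fit sequentially on reweighted samples
    "AdaBoost": AdaBoostClassifier(n_estimators=100, random_state=0),
    # Gradient boosting: each new tree fits the negative gradient of the loss
    "gradient boosting": GradientBoostingClassifier(random_state=0),
}

scores = {}
for name, model in models.items():
    scores[name] = cross_val_score(model, X, y, cv=5).mean()
    print(f"{name:18s} mean 5-fold accuracy: {scores[name]:.3f}")
```

The bagging-style models can train their trees in parallel (for example with `n_jobs=-1` on `RandomForestClassifier`), while boosting is inherently sequential because each tree depends on the ones before it.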

Hyperparameter Tuning

Hyperparameter tuning is the process of finding the optimal set of hyperparameters for a decision tree model to enhance its performance. This can be achieved using methods like grid search or random search, where multiple combinations of hyperparameters are evaluated to identify the best configuration.
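A grid search can be sketched with scikit-learn's `GridSearchCV`, which cross-validates every combination in a parameter grid and refits the best one. The dataset (scikit-learn's bundled breast cancer data) and the candidate values in `param_grid` are our own assumptions for illustration.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Every combination is tried: 3 * 3 * 3 * 2 = 54 candidates, each with 5-fold CV
param_grid = {
    "max_depth": [3, 5, None],
    "min_samples_split": [2, 10, 20],
    "min_samples_leaf": [1, 5, 10],
    "criterion": ["gini", "entropy"],
}

search = GridSearchCV(
    DecisionTreeClassifier(random_state=0), param_grid, cv=5, scoring="accuracy"
)
search.fit(X_train, y_train)  # by default, refits the best configuration on all of X_train

print("Best parameters:", search.best_params_)
print(f"Best CV accuracy: {search.best_score_:.3f}")
print(f"Held-out test accuracy: {search.score(X_test, y_test):.3f}")
```

For larger search spaces, `RandomizedSearchCV` takes the same estimator with a `param_distributions` dictionary and evaluates only `n_iter` sampled combinations instead of all of them.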

Conclusion

In this article, we discussed the structure, working mechanism, real-world applications, and methods to improve the performance of decision trees. Hands-on practice is key to mastering decision trees and understanding their nuances, and working on real-world data projects also provides valuable experience and sharpens problem-solving skills.

Nate Rosidi is a data scientist and product strategy consultant. He is also an adjunct professor teaching analytics and the founder of StrataScratch, a platform that helps data scientists prepare for interviews with real interview questions asked by top companies. Nate writes about the latest career market trends, provides interview tips, shares data science projects, and covers everything SQL.
