The AIhub Coffee Corner captures the insights of AI experts during brief discussions. Recently, numerous articles have been published about the decline of the generative AI hype. Our experts ponder whether the bubble has burst. This time, joining the conversation are Tom Dietterich (Oregon State University), Sabine Hauert (University of Bristol), Michael Littman (Brown University), and Marija Slavkovik (University of Bergen).

Sabine Hauert: There have been several recent articles in major media outlets discussing whether AI has failed to generate profits and if it might be just hype or a bubble. What are your thoughts?

Marija Slavkovik: There’s an article by Cory Doctorow that questions what kind of bubble AI is. I appreciate his perspective that many bubbles come and go; some leave something useful, while others only generate short-term benefits, like significant profits for investment bankers. It’s like a wave, hitting different targets at different times. I’m confident there are areas where this wave is just arriving and others where it’s already passed. It’s a commercial question. I’ve seen a lot of research at IJCAI this year, and I expect the same for ECAI, focusing on LLMs for various applications. So, I wouldn’t say it’s gone. Many researchers are doing small, interesting projects with LLMs. We haven’t seen grants involving LLMs yet, as the process takes a year, but I expect to see many LLM-related projects in the next grant cycle. So, the question is, for whom has the bubble burst? Perhaps for journalistic interest.

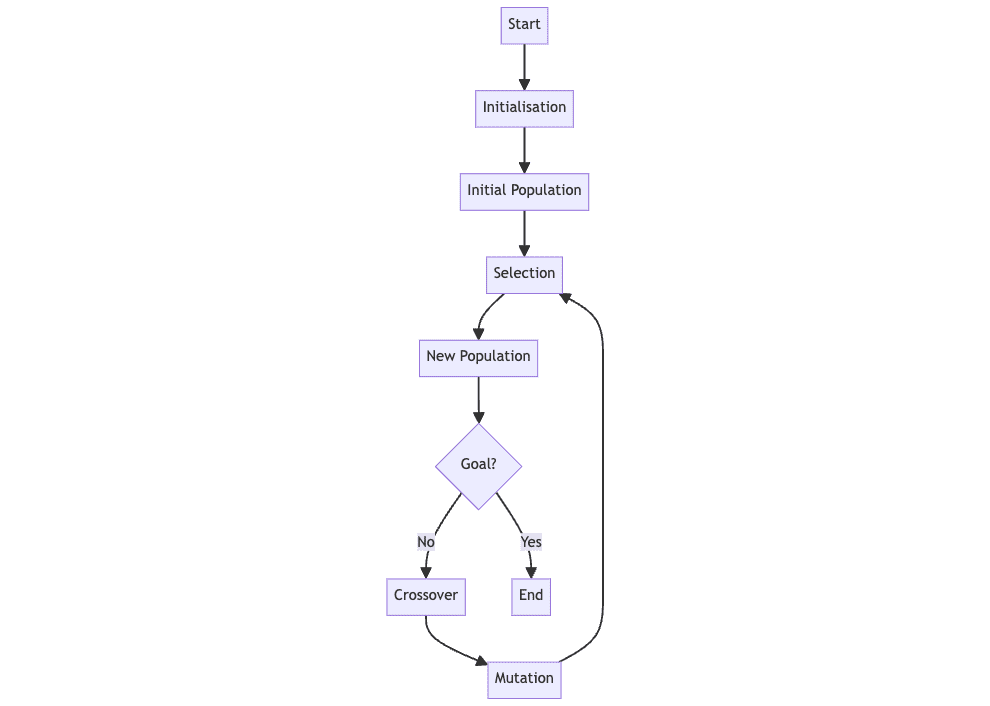

Tom Dietterich: The narrative is always about the new wave, then the backlash, then the counter-backlash, so the anti-AI hype is rising now. Some find this narrative useful and promote it. There’s a famous diagram showing how research and application trajectories often oscillate between government and industry funding before significantly impacting the market. It usually takes about 20 years from an initial research breakthrough to substantial success in the private sector. With LLMs, I’m not sure if we know the killer application yet. Everyone’s asking what LLMs will be truly useful for. Many companies are still figuring this out. Realistically, it might take another nine or even 19 years to understand, especially since this technology is unlike anything we’ve had before. It has incredible scale but superficial understanding. I attended a workshop at Princeton where people from academia and industry discussed programming frameworks for systems using LLMs as components. It was interesting, but these platforms still feel like academic curiosities. We don’t know what’s happening inside companies, except that, for example, Llama2 7B has been downloaded about 250,000 times. Everyone’s experimenting with these things.

Sabine: Marija mentioned we’ll see which grants get funded. Michael, do you still invest in things when the hype is dying down, or is that when the industry should get involved? How do you sustain this, essentially?

Michael Littman: There’s a time scale difference, as Tom mentioned, between academia’s "digestive system" and the business world. Business is about buying low and selling high, but you can also profit from buying high and selling low if you time it right. But there’s no "buy medium, sell medium"… they only profit when things change. So, there must be a narrative of change that’s crucial for them. If they need to make money quarterly, there must be faster changes. In academia, we absorb these ideas and consider them. If you look at conferences and grant proposals, the field has largely adopted this and is doing due diligence to understand what’s happening. What’s the science here? What’s the capability we didn’t have before? How can we strip away the excess and get to the core idea (because that’s what allows us to apply it broadly and generally)?

What I see is that it’s great to see what companies invest in, but often, grant systems need to be somewhat counter-cyclical because when companies move on, it’s crucial for researchers building these foundations to explore these ideas. At the National Science Foundation, the director often mentions that NSF continued funding AI even during AI winters. Companies said, "We won’t touch this with a ten-foot pole," but government support continued, likely in other countries too. So, I don’t think it changes where we are as researchers, except for those wearing dual hats, trying to make money in business while doing research. But for the field as a whole, I don’t see a slowdown; I see a steady, nice increase in people genuinely grappling with the issues.

Sabine: It’s good to see the positivity and continuity of this funding. What do you think causes the noise on the industry side? Did we overpromise? Are they seeing the reality of what this technology can do now, or is it still overall positive?

Marija: Totally uninformed opinion, but Amazon poured $4 billion into Anthropic, and it hasn’t outperformed GPT-4. If I had to guess the source of this nervousness, $4 billion is a lot of money, and it hasn’t resulted in better performance, or at least we haven’t seen anything in the media about it.

Tom: That’s true; we’ve seen several models that match GPT-4, but we haven’t seen an increase like we did from GPT-3 to GPT-4. Speculation abounds, but the absence of evidence is not evidence of absence.

I had a question for you, Marija, regarding the waves crashing in machine learning, computer vision, and natural language. It’s been a massive wave. Where are we, for example, in robotics and multi-agent systems?

Marija: People are adopting LLMs for small tasks, so they’re becoming hybrid. You use a bit of LLM somewhere and integrate it with the status quo. So, it’s interesting.

Tom: One of my colleagues, Jonathan Hurst, works at Agility Robotics and sees other robotics startups raising a lot of money just by putting LLMs on their robots and being able to say, "pick up the red cup." We’re essentially back to SHRDLU but with a real robot. He finds it ridiculous because these people raise three or four times more money than him, but their robots aren’t as good in his opinion. So, there’s some business savvy with these startups.

Sabine: Robotics grounds things because you need them to accomplish specific tasks. Instead of becoming general, you ask, "what can this thing be useful for in this small corner of the world I’m working in with robotics?" I think this could be useful for many real-world LLM applications. They’ve gone too far with "it can do everything," but it can be very useful in specific contexts when better framed. And maybe that’s something robotics can offer: a bit of grounding.

Tom: Meanwhile, we’re seeing the ideas behind diffusion models popping up everywhere. These could be just as intellectually interesting. For example, people use diffusion models to represent policies for controllers. There’s also work in molecular design, material design, and architectural design. Much of this is based on similar ideas, and if I were betting on where the most money will be made, I think it will be in atoms. So, biology and materials science more than language. With language, it’s a kind of universal interface, so it’ll be an interface layer, but I think the huge multi-order-of-magnitude improvements will come from applications in fluid dynamics, like weather and molecular dynamics simulations. These models allow people to do things on time scales several orders of magnitude longer than before. There are huge advances in this area, but they remain somewhat under the media radar.

Michael: Sounds like you’re optimistic about using the Adam optimizer as an atom optimizer.

Tom: Ha! Something like that.

Sabine: Any final thoughts?

Michael: A colleague of mine, Eugene Charniak, retired from the department a few years ago and then started working on a book in retirement, but he sadly passed away. He had nearly finished the book, so I worked with the publisher, MIT Press, to prepare it, and it should be out in October. The book’s premise is essentially that now that we have these large AI models trained by machine learning, the AI field can finally begin. He didn’t see this moment as the end of our story but as if everything until now was just a prologue, and now the story can actually start. I think it’s a really exciting way to look at things. I’m not sure I agree 100% because I really like a lot of things that have already happened. But it’s a really interesting perspective suggesting we might have a new conceptual framework for thinking about everything we’ve tackled in AI: learning, planning, decision-making, reasoning. All these things could potentially have a new foundation, and that will only happen if researchers buckle down and understand what this thing is and how we put the pieces together. So, I think it’s super exciting.

Marija: I’m simply fascinated by people’s willingness to believe, right? I mean, like the willingness to believe we have computers that talk and reason. It’s just out of this world. I expect it from people who don’t do anything in computing. But then I sit at tables with people who understand a bit of computing, and then they say, "but it reasons." So, I’d say it’s not over until there’s real reasoning.

Sabine: And I’ll plug us [AIhub] because I think this positive trajectory says it’s just the beginning of things and that we need to build on this foundation. We need several years of funding to really reach real-world applications with breakthroughs, which also means we need to communicate our research better. I think there are exaggerated expectations about what the technology can do, because of everything Marija says. And maybe that’s the role we play – grounding it a bit.

