By Qi Pang

Recent advancements in generative models have enabled AI-generated text, code, and images to closely resemble human-created content across various applications. Watermarking, a method that embeds information into a model’s output to authenticate its origin, seeks to prevent the misuse of AI-generated content. Current leading watermarking techniques incorporate watermarks by slightly altering the probabilities of the language model’s output tokens, detectable through statistical testing during verification.

Regrettably, our research reveals that common design choices in language model watermarking schemes make these systems unexpectedly vulnerable to watermark removal or spoofing attacks, resulting in fundamental trade-offs in robustness, utility, and usability. To address these trade-offs, we thoroughly examine a set of straightforward yet effective attacks on typical watermarking systems and propose guidelines and defenses for practical LLM watermarking.

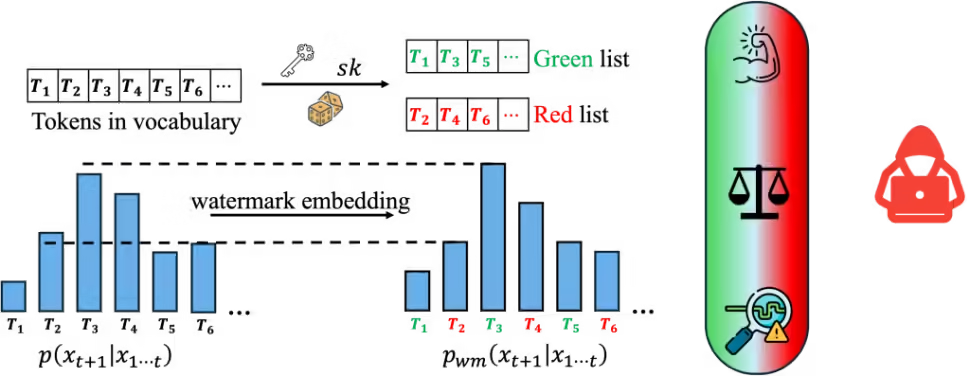

Table 1. Examples generated using LLAMA-2-7B with/without the KGW watermark under various attacks. The watermark divides the vocabulary into green and red lists, favoring words in the green list. The Z-score indicates the detection confidence of the watermark, and perplexity (PPL) assesses text quality. (a) In the piggyback spoofing attack, we exploit watermark robustness by generating incorrect content that appears watermarked (matching the Z-score of the watermarked baseline), potentially harming the LLM’s reputation. Incorrect tokens modified by the attacker are marked in orange, and watermarked tokens in blue. (b-c) In watermark-removal attacks, attackers can effectively lower the Z-score below the detection threshold while maintaining high sentence quality (low PPL) by exploiting either the use of multiple keys or publicly available watermark detection APIs.

What is LLM watermarking?

Similar to image watermarks, LLM watermarking embeds invisible secret patterns into text. Here, we briefly introduce LLMs and LLM watermarks. We use \( X \) to denote a sequence of tokens, where \( X_i \in \mathcal{V} \) is the i-th token in the sequence and \( \mathcal{V} \) is the vocabulary. \( M_{\text{orig}} \) denotes the original model without a watermark, \( M_{\text{wm}} \) is the watermarked model, and \( sk \in \mathcal{S} \) is the watermark secret key sampled from \( \mathcal{S} \).

Language model. State-of-the-art (SOTA) LLMs are auto-regressive models, predicting the next token based on prior tokens. We define language models more formally below:

Definition 1 (Language Model). A language model (LM) without a watermark is a mapping:

\[ M_{\text{orig}}: \mathcal{V}^* \rightarrow \mathcal{V}, \]

where the input \( \textbf{x} \) is a sequence of \( t \) tokens. \( M_{\text{orig}}(\textbf{x}) \) first returns the probability distribution for the next token, then \( X_{t+1} \) is sampled from this distribution.

Figure 1. LLM predicts the next tokens auto-regressively.

Watermarks for LLMs. We focus on SOTA decoding-based robust watermarking schemes including KGW, Unigram, and Exp. In each of these methods, the watermarks are embedded by perturbing the output distribution of the original LLM. The perturbation is determined by secret watermark keys held by the LLM owner. Formally, we define the watermarking scheme:

Definition 2 (Watermarked LLMs). The watermarked LLM takes a token sequence \( X \in \mathcal{V}^* \) and a secret key \( sk \in \mathcal{S} \) as input, and outputs a perturbed probability distribution for the next token. The perturbation is determined by \( sk \):

\[ M_{\text{wm}} : \mathcal{V}^* \times \mathcal{S} \rightarrow \mathcal{V}, \]

The watermark detection outputs the statistical testing score for the null hypothesis that the input token sequence is independent of the watermark secret key:

\[ f_{\text{detection}} : \mathcal{V}^* \times \mathcal{S} \rightarrow \mathbb{R}, \]

The output score reflects the confidence of the watermark’s existence in the input.

Figure 2. Watermark is embedded by perturbing the probability distribution of the next token. The perturbation is determined by the secret key \( sk \).
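To make Definition 2 concrete, here is a minimal sketch of a KGW-style scheme on a toy vocabulary. It is an illustration under stated assumptions, not the reference implementation of any of the schemes above: the hash-based `is_green` helper, the green-list fraction `GAMMA`, the logit bias `DELTA`, the random-logit stand-in for the model, and the one-proportion z-test in `f_detection` are all choices made for this example.

```python
import hashlib
import math
import random

VOCAB_SIZE = 200  # toy vocabulary of integer token ids (assumption)
GAMMA = 0.5       # fraction of the vocabulary placed in the green list (assumption)
DELTA = 4.0       # logit bias added to green tokens (assumption)


def is_green(prev_token, token, sk):
    """Key-dependent pseudo-random split of the vocabulary, reseeded by the previous token."""
    digest = hashlib.sha256(f"{sk}:{prev_token}:{token}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < GAMMA


def next_token_probs(logits, prev_token, sk, delta):
    """M_wm: add `delta` to green-token logits, then softmax. delta=0 recovers M_orig."""
    biased = [
        logit + delta if is_green(prev_token, tok, sk) else logit
        for tok, logit in enumerate(logits)
    ]
    top = max(biased)
    exps = [math.exp(b - top) for b in biased]
    total = sum(exps)
    return [e / total for e in exps]


def generate(length, sk, rng, delta):
    """Auto-regressive sampling from a stand-in model that emits random logits."""
    tokens = [0]  # dummy start token
    for _ in range(length):
        logits = [rng.gauss(0.0, 1.0) for _ in range(VOCAB_SIZE)]
        probs = next_token_probs(logits, tokens[-1], sk, delta)
        tokens.append(rng.choices(range(VOCAB_SIZE), weights=probs)[0])
    return tokens


def f_detection(tokens, sk):
    """z-score for the null hypothesis that the tokens are independent of sk."""
    scored = len(tokens) - 1
    green = sum(is_green(p, t, sk) for p, t in zip(tokens, tokens[1:]))
    return (green - GAMMA * scored) / math.sqrt(scored * GAMMA * (1 - GAMMA))


if __name__ == "__main__":
    rng = random.Random(0)
    print("watermarked z:", f_detection(generate(200, "secret", rng, DELTA), "secret"))
    print("unwatermarked z:", f_detection(generate(200, "secret", rng, 0.0), "secret"))
```

Text sampled with the bias contains far more green tokens than the fraction `GAMMA` expected by chance, so its z-score is large, while text sampled with `delta=0` scores near zero.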

Common design choices of LLM watermarks

Existing LLM watermarking schemes share several common design choices, including robustness, the use of multiple keys, and public detection APIs, each of which has clear benefits for watermark security and usability. We describe these key design choices briefly below, and then explain how an attacker can easily take advantage of them to launch watermark removal or spoofing attacks.

Robustness. The goal of developing a watermark that is robust to output perturbations is to defend against watermark removal, which may be used to circumvent detection schemes for applications such as phishing or fake news generation. Robust watermark designs have been the topic of many recent works. A more robust watermark can better defend against watermark-removal attacks. However, our work shows that robustness can also enable piggyback spoofing attacks.

Multiple keys. Many works have explored watermark stealing attacks, which infer the secret pattern of the watermark and can then boost the performance of spoofing and removal attacks. A natural and effective defense against watermark stealing is to use multiple watermark keys during embedding, which can improve the unbiasedness property of the watermark (called distortion-free in the Exp watermark). Rotating multiple secret keys is a common practice in cryptography and is also suggested by prior watermarks. Using more keys during embedding yields better watermark unbiasedness, which makes it more difficult for attackers to infer the watermark pattern. However, we show that using multiple keys can also introduce new watermark-removal vulnerabilities.

Public detection API. It is still an open question whether watermark detection APIs should be made publicly available to users. Although this makes it easier to detect watermarked text, it is commonly acknowledged that it will make the system vulnerable to attacks. We study this statement more precisely by examining the specific risk trade-offs that exist, as well as introducing a novel defense that may make the public detection API more feasible in practice.

Attacks, defenses, and guidelines

Although the above design choices are beneficial for enhancing the security and usability of watermarking systems, they also introduce new vulnerabilities. Our work studies a set of simple yet effective attacks on common watermarking systems and proposes guidelines and defenses for LLM watermarking in practice.

In particular, we study two types of attacks: watermark-removal attacks and (piggyback or general) spoofing attacks. In the watermark-removal attack, the attacker aims to generate a high-quality response from the LLM without an embedded watermark. For the spoofing attacks, the goal is to generate a harmful or incorrect output that has the victim organization’s watermark embedded.

Piggyback spoofing attack exploiting robustness

More robust watermarks can better defend against editing attacks, but this seemingly desirable property can also be easily misused by malicious users to launch simple piggyback spoofing attacks. In piggyback spoofing attacks, a small portion of toxic or incorrect content is inserted into the watermarked material, making it seem like it was generated by a specific watermarked LLM. The toxic content will still be detected as watermarked, potentially damaging the reputation of the LLM service provider.

Attack procedure. (i) The attacker queries the target watermarked LLM to receive a high-entropy watermarked sentence \( X_{wm} \). (ii) The attacker edits \( X_{wm} \) to form a new piece of text \( X' \) and claims that \( X' \) is generated by the target LLM. The editing method can be defined by the attacker. Simple strategies could include inserting toxic tokens into the watermarked sentence \( X_{wm} \), or editing specific tokens to make the output inaccurate. As we show, editing can be done at scale by querying another LLM like GPT4 to generate fluent output.
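The effect of the two steps on the detection score can be sketched with a toy KGW-style detector. Everything here is an assumption made for illustration: `query_watermarked_llm` is a hypothetical stand-in that simply emits green tokens 90% of the time, the edits are random token replacements rather than GPT4 rewrites, and the z-score formula is the usual one-proportion test with green fraction `GAMMA`.

```python
import hashlib
import math
import random

GAMMA = 0.5          # green-list fraction (assumption)
THRESHOLD = 4.0      # z-score detection threshold used in this post
VOCAB_SIZE = 50_000  # toy vocabulary size (assumption)


def is_green(prev_token, token, sk):
    digest = hashlib.sha256(f"{sk}:{prev_token}:{token}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < GAMMA


def z_score(tokens, sk):
    """Detector side: only the provider holds sk."""
    scored = len(tokens) - 1
    green = sum(is_green(p, t, sk) for p, t in zip(tokens, tokens[1:]))
    return (green - GAMMA * scored) / math.sqrt(scored * GAMMA * (1 - GAMMA))


def query_watermarked_llm(length, sk, rng, green_rate=0.9):
    """Step (i) stand-in: text whose tokens are green with probability `green_rate`."""
    tokens = [0]
    for _ in range(length):
        want_green = rng.random() < green_rate
        token = rng.randrange(VOCAB_SIZE)
        while is_green(tokens[-1], token, sk) != want_green:
            token = rng.randrange(VOCAB_SIZE)
        tokens.append(token)
    return tokens


def piggyback_edit(tokens, num_edits, rng):
    """Step (ii): overwrite a few positions. The attacker never needs the key."""
    edited = list(tokens)
    for pos in rng.sample(range(1, len(tokens)), num_edits):
        edited[pos] = rng.randrange(VOCAB_SIZE)
    return edited


if __name__ == "__main__":
    rng = random.Random(1)
    x_wm = query_watermarked_llm(200, "secret", rng)
    x_edit = piggyback_edit(x_wm, 3, rng)
    print("z before edit:", z_score(x_wm, "secret"))
    print("z after edit: ", z_score(x_edit, "secret"))
```

Because each replaced token can change at most two of the scored token pairs in this toy detector, a handful of edits barely moves the z-score, which is exactly the robustness property the attack piggybacks on.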

Results. We show that piggyback spoofing can generate fluent, watermarked, but inaccurate results at scale. Specifically, we edit the watermarked sentence by querying GPT4 using the prompt "Modify less than 3 words in the following sentence and make it inaccurate or have opposite meanings."

Figure 3. Piggyback spoofing on KGW and LLAMA-2-7B. Lower perplexity (PPL) indicates higher sentence quality. Higher z-score reflects higher confidence in watermarking.

Figure 3 shows that we can successfully generate fluent results, with a slightly higher PPL. 94.17% of the piggyback results have a z-score higher than the detection threshold of 4. We randomly sample 100 piggyback results and manually check that most of them (92%) are fluent and have inaccurate or opposite content from the original watermarked content. We present one spoofing example below. For more results, please check our manuscript.

Watermarked content, z-score: 4.93, PPL: 4.61

Earth has a history of 4.5 billion years and humans have been around for 200,000 years. Yet humans have been using computers for just over 70 years and even then the term was first used in 1945. In the age of technology, we are still just getting started. The first computer, ENIAC (Electronic Numerical Integrator And Calculator), was built at the University of Pennsylvania between 1943 and 1946. The ENIAC took up 1800 sq ft and had 18,000 vacuum tube and mechanical parts. The ENIAC was used for mathematical calculations, ballistics, and code breaking. The ENIAC was 1000 times faster than any other calculator of the time. The first computer to run a program was the Z3, built by Konrad Zuse at his house.

Piggyback attack, z-score: 4.36, PPL: 5.68

Earth has a history of 4.5 billion years and humans have been around for 200,000 years. Yet humans have been using computers for just over 700 years and even then the term was first used in 1445. In the age of technology, we are still just getting started. The first computer, ENIAC (Electronic Numerical Integrator And Calculator), was built at the University of Pennsylvania between 1943 and 1946. The ENIAC took up 1800 sq ft and had 18,000 vacuum tube and mechanical parts. The ENIAC was used for mathematical calculations, ballistics, and code breaking. The ENIAC was 1000 times slower than any other calculator of the time. The first computer to run a program was the Z3, built by Konrad Zuse at his house.

Discussion. Piggyback spoofing attacks are easy to execute in practice. Robust LLM watermarks typically do not consider such attacks during design and deployment, and existing robust watermarks are inherently vulnerable to such attacks. We consider this attack to be challenging to defend against, especially considering the examples presented above, where by only editing a single token, the entire content becomes incorrect. It is hard, if not impossible, to detect whether a particular token is from the attacker by using robust watermark detection algorithms. Recently, researchers proposed publicly detectable watermarks that plant a cryptographic signature into the generated sentence [Fairoze et al. 2024]. They mitigate such piggyback spoofing attacks at the cost of sacrificing robustness. Thus, practitioners should weigh the risks of removal vs. piggyback spoofing attacks for the model at hand.

Guideline #1. Robust watermarks are vulnerable to spoofing attacks and are not suitable as proof of content authenticity alone. To mitigate spoofing while preserving robustness, it may be necessary to combine additional measures such as signature-based fragile watermarks.

Watermark-removal attack exploiting multiple keys

SOTA watermarking schemes aim to ensure the watermarked text retains its high quality and the private watermark patterns are not easily distinguished by maintaining an unbiasedness property:

\[ |\mathbb{E}_{sk \in \mathcal{S}}[M_{\text{wm}}(\textbf{x}, sk)] - M_{\text{orig}}(\textbf{x})| = O(\epsilon), \]

i.e., the expected distribution of watermarked output over the watermark key space \( sk \in \mathcal{S} \) is close to the output distribution without a watermark, differing by a distance of \( \epsilon \). Exp is rigorously unbiased, and KGW and Unigram slightly shift the watermarked distributions.

We consider the setting in which multiple keys are used during watermark embedding to defend against watermark stealing attacks. The insight of our proposed watermark-removal attack is that, given the “unbiased” nature of watermarks, malicious users can estimate the output distribution without any watermark by repeatedly querying the watermarked LLM using the same prompt. As this attack estimates the original, unwatermarked distribution, the quality of the generated content is preserved.

Attack procedure. (i) An attacker queries a watermarked model with an input \( X \) multiple times, observing \( n \) subsequent tokens \( X_{t+1} \). (ii) The attacker then creates a frequency histogram of these tokens and samples according to the frequency. This sampled token matches the result of sampling on an unwatermarked output distribution with a nontrivial probability. Consequently, the attacker can progressively eliminate watermarks while maintaining a high quality of the synthesized content.
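The histogram step can be sketched for a single next-token position as follows. This is a toy model under assumptions of our own choosing: a KGW-style logit bias `DELTA` on a key-dependent green list, a provider that picks one of its keys uniformly at random per query, and total variation distance as the measure of how close the attacker's estimate is to the unwatermarked distribution.

```python
import collections
import hashlib
import math
import random

GAMMA, DELTA = 0.5, 2.0  # green-list fraction and logit bias (assumptions)


def is_green(token, sk):
    """The prompt is fixed, so only the (key, token) pair matters in this sketch."""
    digest = hashlib.sha256(f"{sk}:{token}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < GAMMA


def softmax(logits):
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def watermarked_dist(logits, sk):
    return softmax([x + DELTA if is_green(t, sk) else x for t, x in enumerate(logits)])


def estimate_next_token_dist(logits, keys, n_queries, rng):
    """Steps (i)-(ii): re-query the same prompt and histogram the returned tokens."""
    dists = {sk: watermarked_dist(logits, sk) for sk in keys}  # provider side
    counts = collections.Counter()
    for _ in range(n_queries):
        sk = rng.choice(keys)  # provider uses one of its keys per query
        counts[rng.choices(range(len(logits)), weights=dists[sk])[0]] += 1
    return [counts[t] / n_queries for t in range(len(logits))]


def total_variation(p, q):
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


if __name__ == "__main__":
    rng = random.Random(0)
    logits = [rng.gauss(0.0, 0.5) for _ in range(50)]
    original = softmax(logits)
    for n_keys in (1, 17):
        keys = [f"key-{i}" for i in range(n_keys)]
        hist = estimate_next_token_dist(logits, keys, 5000, rng)
        print(n_keys, "keys, distance to unwatermarked:", total_variation(hist, original))
```

The attacker would then draw the next token from the histogram, for example with `rng.choices(range(len(hist)), weights=hist)`. With a single key the histogram reproduces the biased distribution, whereas with many keys the per-key biases average out and the estimate moves toward the unwatermarked distribution.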

Results. We study the trade-off between resistance against watermark stealing and watermark-removal attacks by evaluating a recent watermark stealing attack [Jovanović et al. 2024], where we query the watermarked LLM to obtain 2.2 million tokens to “steal” the watermark pattern and then launch spoofing attacks using the estimated watermark pattern. In our watermark removal attack, we consider that the attacker has observations with different keys.

Figure 4a. Z-Score and attack success rate (ASR) of watermark stealing on KGW watermark and LLAMA-2-7B model with different numbers of watermark keys n.

Figure 4b. Z-Score and attack success rate (ASR) of watermark-removal on KGW watermark and LLAMA-2-7B model with different numbers of watermark keys n.

Figure 4c. Perplexity (PPL) of watermark-removal on KGW watermark and LLAMA-2-7B model with different numbers of watermark keys n.

As shown in Figure 4a, using multiple keys can effectively defend against watermark stealing attacks. With a single key, the ASR is 91%. Using three keys reduces the ASR to 13%, and with more than 7 keys, the ASR of watermark stealing is close to zero. However, using more keys also makes the system vulnerable to our watermark-removal attacks, as shown in Figure 4b. When more than 7 keys are used, the detection scores of the content produced by our watermark-removal attacks closely resemble those of unwatermarked content and are much lower than the detection thresholds, with ASRs higher than 97%. Figure 4c suggests that using more keys also improves the quality of the attack’s output. This is because, with a greater number of keys, the attacker is more likely to accurately estimate the unwatermarked distribution.

Discussion. Many prior works have suggested using multiple keys to defend against watermark stealing attacks. However, we reveal that a conflict exists between improving resistance to watermark stealing and the feasibility of removing watermarks. Our results show that finding a “sweet spot” in terms of the number of keys to use to mitigate both the watermark stealing and the watermark-removal attacks is not trivial. Given this tradeoff, we suggest that LLM service providers consider “defense-in-depth” techniques such as anomaly detection, query rate limiting, and user identification verification.

Guideline #2. Using a larger number of watermarking keys can defend against watermark stealing attacks, but increases vulnerability to watermark-removal attacks. Limiting users’ query rates can help to mitigate both attacks.

Attacks exploiting public detection APIs

Finally, we show that publicly available detection APIs can enable both spoofing and removal attacks. The insight is that by querying the detection API, the attacker can gain knowledge about whether a specific token is carrying the watermark or not. Thus, the attacker can select the tokens based on the detection result to launch spoofing and removal attacks.

Attack procedure. (i) An attacker feeds a prompt into the watermarked LLM (removal attack) or into a local LLM (spoofing attack), which generates the response in an auto-regressive manner. For the token \( X_i \), the attacker will generate a list of possible replacements for \( X_i \). (ii) The attacker will query the detection API using these replacements and sample a token based on their probabilities and detection scores to remove or spoof the watermark while preserving a high output quality. This replacement list can be generated by querying the watermarked LLM, querying a local model, or simply returned by the watermarked LLM (e.g., enabled by the top_logprobs=5 option of OpenAI’s API).
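A minimal sketch of this loop is shown below. It is a simplification made for illustration: `detection_api` is a toy KGW-style z-score that the attacker can only call as a black box, `propose_replacements` is a hypothetical stand-in that returns random candidate tokens with random probabilities, and the selection rule (keep the candidates with the best detection score, then sample among them by probability) is one possible way to combine probabilities and detection scores rather than the exact rule used in our experiments.

```python
import hashlib
import math
import random

GAMMA = 0.5                         # green-list fraction (assumption)
VOCAB_SIZE = 50_000                 # toy vocabulary size (assumption)
_PROVIDER_KEY = "provider-secret"   # hidden from the attacker; used only by the API


def _is_green(prev_token, token):
    digest = hashlib.sha256(f"{_PROVIDER_KEY}:{prev_token}:{token}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < GAMMA


def detection_api(tokens):
    """Public detection endpoint returning a z-score; a black box to the attacker."""
    scored = len(tokens) - 1
    green = sum(_is_green(p, t) for p, t in zip(tokens, tokens[1:]))
    return (green - GAMMA * scored) / math.sqrt(scored * GAMMA * (1 - GAMMA))


def propose_replacements(rng, k=5):
    """Stand-in for a top-k candidate list with probabilities."""
    weights = [rng.random() + 1e-9 for _ in range(k)]
    total = sum(weights)
    return [(rng.randrange(VOCAB_SIZE), w / total) for w in weights]


def api_guided_generation(length, rng, mode):
    """mode='removal' steers the score down, mode='spoofing' steers it up."""
    sign = -1.0 if mode == "removal" else 1.0
    tokens = [0]  # dummy start token
    for _ in range(length):
        candidates = propose_replacements(rng)
        scored = [(sign * detection_api(tokens + [tok]), tok, prob) for tok, prob in candidates]
        best = max(score for score, _, _ in scored)
        keep = [(tok, prob) for score, tok, prob in scored if score == best]
        # Among equally good candidates, sample by probability to preserve quality.
        tokens.append(rng.choices([t for t, _ in keep], weights=[p for _, p in keep])[0])
    return tokens


if __name__ == "__main__":
    rng = random.Random(0)
    print("removal z: ", detection_api(api_guided_generation(100, rng, "removal")))
    print("spoofing z:", detection_api(api_guided_generation(100, rng, "spoofing")))
```

Each detection query leaks whether the newest candidate raised or lowered the score, which is all the attacker needs to steer the text in either direction without knowing the key.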

Results. We evaluate the detection scores for both the watermark-removal and the spoofing attacks. Furthermore, for the watermark-removal attack, where the attackers care more about the output quality, we report the output PPL.

Figure 5a. Z-Score/P-Value of watermark removal using detection APIs on LLAMA-2-7B and different watermarks.

Figure 5b. The perplexity of watermark removal using detection APIs on LLAMA-2-7B and different watermarks.

Figure 5c. Z-Score/P-Value of watermark spoofing using detection APIs on LLAMA-2-7B and different watermarks.

As shown in Figure 5a and Figure 5b, watermark-removal attacks exploiting the detection API significantly reduce detection confidence while maintaining high output quality. For instance, for the KGW watermark on the LLAMA-2-7B model, we achieve a median z-score of 1.43, which is much lower than the threshold of 4. The PPL is also close to that of the watermarked outputs (6.17 vs. 6.28). We observe that the Exp watermark has higher PPL than the other two watermarks. A possible reason is that the Exp watermark is deterministic, while other watermarks enable random sampling during inference. Our attack also employs sampling based on the token probabilities and detection scores, thus we can improve the output quality for the Exp watermark. The spoofing attacks also significantly boost the detection confidence even though the content is not from the watermarked LLM, as depicted in Figure 5c.

Defending detection with differential privacy. In light of the issues above, we propose an effective defense using ideas from differential privacy (DP) to counteract spoofing attacks based on the detection API. DP adds random noise to function results evaluated on private datasets such that the results from neighboring datasets are indistinguishable. Similarly, we consider adding Gaussian noise to the distance score in the watermark detection, making the detection \( (\epsilon, \delta) \)-DP. This ensures that attackers cannot tell the difference between two queries that differ by a single replaced token, which makes the attacks harder to launch.
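The intuition can be sketched with a toy experiment. The code below assumes a KGW-style z-score with green fraction `GAMMA` and treats the noise standard deviation `noise_std` as a free parameter; how it maps to the noise scale reported in our figures is not specified here, so the value used is arbitrary. It measures how often an attacker who queries two texts differing by one green token can tell from the noisy scores which text is "more watermarked".

```python
import math
import random

GAMMA = 0.5  # green-list fraction of a KGW-style z-score (assumption)


def z_from_green_count(green, scored):
    return (green - GAMMA * scored) / math.sqrt(scored * GAMMA * (1 - GAMMA))


def noisy_detection(z, noise_std, rng):
    """Release the detection score with Gaussian noise instead of the exact value."""
    return z + rng.gauss(0.0, noise_std)


def attacker_guess_accuracy(noise_std, rng, trials=20_000, scored=200, green=120):
    """Fraction of trials in which two noisy queries rank the two texts correctly."""
    z_low = z_from_green_count(green, scored)
    z_high = z_from_green_count(green + 1, scored)  # one extra green token
    correct = 0
    for _ in range(trials):
        if noisy_detection(z_high, noise_std, rng) > noisy_detection(z_low, noise_std, rng):
            correct += 1
    return correct / trials


if __name__ == "__main__":
    rng = random.Random(0)
    print("no noise:  ", attacker_guess_accuracy(0.0, rng))
    print("with noise:", attacker_guess_accuracy(1.0, rng))
```

Without noise, every query pair reveals whether the replaced token was green. Once the noise is large relative to the score change caused by one token, a single comparison is close to a coin flip, so the attacker needs many more queries per token to make progress.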

Figure 6a. Spoofing attack success rate (ASR) and detection accuracy (ACC) without and with DP watermark detection under different noise parameters.

Figure 6b. Z-scores of original text without attack, spoofing attack without DP defense, and spoofing attacks with DP defense. We use the best \( \sigma=4 \) from Figure 6a.

As shown in Figure 6, with a noise scale of \( \sigma=4 \), the DP detection’s accuracy drops from the original 98.2% to 97.2% on KGW and LLAMA-2-7B, while the spoofing ASR becomes 0%. The results are consistent for other watermarks and models.

Discussion. The detection API, available to the public, aids users in differentiating between AI and human-created materials. However, it can be exploited by attackers to gradually remove watermarks or launch spoofing attacks. We propose a defense utilizing the ideas in differential privacy. Even though the attacker can still obtain useful information by increasing the detection sensitivity, our defense significantly increases the difficulty of spoofing attacks. However, this method is less effective against watermark-removal attacks that exploit the detection API, because the attackers’ actions will be close to random sampling, which, albeit with lower success rates, remains an effective way of removing watermarks. Therefore, we leave developing a more powerful defense mechanism against watermark-removal attacks exploiting the detection API as future work. We recommend that companies providing detection services detect and curb malicious behavior by limiting query rates from potential attackers, and also verify the identity of users to protect against Sybil attacks.

Guideline #3. Public detection APIs can enable both spoofing and removal attacks. To defend against these attacks, we propose a DP-inspired defense, which combined with techniques such as anomaly detection, query rate limiting, and user identification verification can help to make public detection more feasible in practice.

Conclusion

Although LLM watermarking is a promising tool for auditing the usage of LLM-generated text, fundamental trade-offs exist in the robustness, usability, and utility of existing approaches. In particular, our work shows that common design choices in LLM watermarks can readily be exploited to launch attacks that remove the watermark or generate falsely watermarked text. Our study finds that these vulnerabilities are common to existing LLM watermarks, and it cautions the field against deploying current solutions in practice without carefully considering the impact and trade-offs of watermarking design choices. To establish more reliable future LLM watermarking systems, we also suggest guidelines for designing and deploying LLM watermarks along with possible defenses motivated by the theoretical and empirical analyses of our attacks. For more results and discussions, please see our manuscript.

—

This article was initially published on the ML@CMU blog and appears here with the author’s permission.