Image : ©2024 EPFL – CC-BY-SA 4.0.

By Michael David Mitchell

In neuroscience and biomedical engineering, accurately modeling the complex movements of the human hand has long been a significant challenge. Current models often struggle to capture the intricate interaction between the brain’s motor commands and the physical actions of muscles and tendons. This gap not only hinders scientific progress but also limits the development of effective neuroprosthetics aimed at restoring hand function for individuals suffering from limb loss or paralysis.

Professor Alexander Mathis from EPFL and his team have developed an AI-based approach that advances our understanding of these complex motor functions. The team employed a creative machine learning strategy that combines curriculum-based reinforcement learning with detailed biomechanical simulations.

Mathis’s research presents a detailed, dynamic, and anatomically accurate model of hand movement that draws directly on how humans acquire complex motor skills. The work won the MyoChallenge at the NeurIPS conference in 2022, and the results have since been published in the journal Neuron.

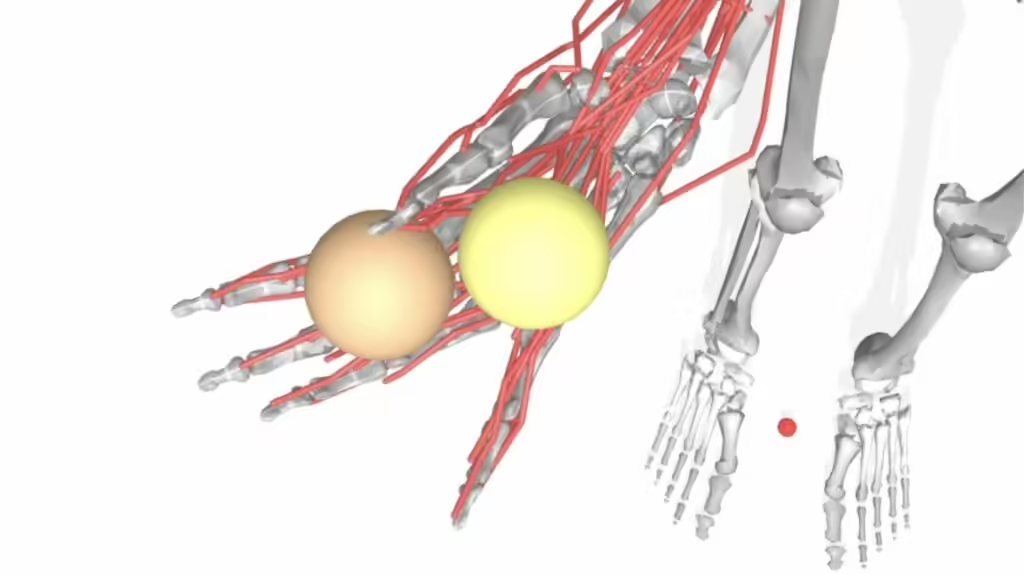

Virtually Controlling Baoding Balls

“What excites me most about this research is that we are delving into the fundamental principles of human motor control, something that has remained a mystery for so long. We are not just building models; we are uncovering the fundamental mechanisms of how the brain and muscles work together,” explains Mathis.

The NeurIPS challenge from Meta motivated the EPFL team to find a new approach to an AI technique known as reinforcement learning. The task was to build an AI capable of accurately manipulating two Baoding balls with a simulated hand driven by 39 muscles acting in a highly coordinated manner. This seemingly simple task is extremely difficult to replicate virtually, given the complex dynamics of hand movements, including muscle synchronization and balance maintenance.

In this highly competitive environment, three graduate students – Alberto Chiappa from Alexander Mathis’s group, Pablo Tano, and Nisheet Patel from Alexandre Pouget’s group at the University of Geneva – significantly outperformed their rivals. Their AI model achieved a 100% success rate in the first phase of the competition, surpassing its closest competitor. Even in the more challenging second phase, their model demonstrated its strength in increasingly difficult situations and maintained a substantial lead to win the competition.

Breaking Tasks into Smaller Parts – and Repeating Them

“To win, we drew inspiration from how humans acquire sophisticated skills in a process known as part training in sports science,” explains Mathis. This part-to-whole approach inspired the curriculum learning method used in the AI model, where the complex task of controlling hand movements was broken down into smaller, manageable parts.

“To overcome the limitations of current machine learning models, we applied a method called curriculum learning. After 32 stages and nearly 400 hours of training, we successfully trained a neural network to accurately control a realistic model of the human hand,” says Alberto Chiappa.
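The idea behind curriculum learning can be illustrated with a toy sketch. This is not the team's method or code: there is no neural network or biomechanical simulation here, and the scalar `skill`, the `difficulties` list, and the `train` function are all hypothetical stand-ins. The sketch only shows why ordering tasks from easy to hard can help: the learner improves only when it succeeds, so a task that is too hard from the outset gives it nothing to learn from.

```python
import random


def train(difficulties, pass_rate=0.8, episodes=50, max_rounds=200, seed=0):
    """Toy curriculum loop (illustrative assumption, not the published method).

    The learner moves to the next stage only after its success rate on the
    current stage reaches `pass_rate`. Returns (stages_completed, skill).
    """
    rng = random.Random(seed)
    skill = 0.0  # hypothetical scalar standing in for the quality of a policy
    completed = 0
    for difficulty in difficulties:
        passed = False
        for _round in range(max_rounds):
            successes = 0
            for _episode in range(episodes):
                # Success is likely only when skill is close to the difficulty;
                # a gap of 1.0 or more means the task is never solved.
                p_success = min(1.0, max(0.0, 1.0 - (difficulty - skill)))
                if rng.random() < p_success:
                    successes += 1
                    skill += 0.01  # learning happens only on success
            if successes / episodes >= pass_rate:
                passed = True
                break
        if not passed:
            break  # stuck on this stage: stop the curriculum here
        completed += 1
    return completed, skill


# Easy-to-hard curriculum ending at the target difficulty of 2.0.
print(train([0.5, 1.0, 1.5, 2.0]))
# Training on the hardest task directly: no successes, so no learning.
print(train([2.0], max_rounds=20))  # (0, 0.0)
```

In this toy setting the staged run reaches the final task, while the direct attempt never records a single success. The real challenge differs in every detail, but the same logic motivates splitting a hard skill into a sequence of easier stages.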

One of the main reasons for the success of this model lies in its ability to recognize and use basic, repeatable movement patterns, known as motor primitives. In an exciting scientific twist, this approach to learning behavior could inform neuroscience about the brain’s role in determining how motor primitives are learned in order to master new tasks. This intricate interplay between the brain and muscle control shows how challenging it can be to build machines and prosthetics that truly mimic human movement.

“You need a large range of movement and a model that resembles a human brain to accomplish a variety of everyday tasks. Even though each task can be broken down into smaller parts, each task requires a different set of these motor primitives to be executed well,” explains Mathis.

Harnessing AI in Exploring and Understanding Biological Systems

Silvestro Micera, a leading researcher in neuroprosthetics at EPFL’s Neuro X Institute and a collaborator of Mathis, emphasizes the crucial importance of this research in understanding the future potential and current limitations of even the most advanced prosthetics. “What we really lack at the moment is a deeper understanding of how finger movement and grasp motor control are achieved. This work goes exactly in this very important direction,” notes Micera. “We know how important it is to connect the prosthesis to the nervous system, and this research provides us with a solid scientific foundation that strengthens our strategy.”

Abigail Ingster, an undergraduate student at the time of the competition and recipient of the EPFL Summer in the Lab scholarship, played a central role in analyzing the learned policy. Thanks to her scholarship, which supports hands-on research experience, Ingster worked closely with Ph.D. student Alberto Chiappa and Professor Mathis to examine the inner workings of the policy learned by the AI.

Read the Full Work

Alberto Silvio Chiappa, Pablo Tano, Nisheet Patel, Abigail Ingster, Alexandre Pouget, and Alexander Mathis. Acquiring musculoskeletal skills with curriculum-based reinforcement learning. Neuron (2024).

EPFL