Learning to Set the Regularization Parameter in Pharmaceutical Regression Applications

By Dravyansh Sharma

Overview

In the simplest form, the relationship between a real dependent variable ( y ) and a vector of features ( X ) can be modeled linearly as ( y = \beta X ). Given training data consisting of pairs of features and dependent variables ((X_1, y_1), (X_2, y_2), \ldots, (X_m, y_m)), the goal is to learn ( \beta ) to predict ( y’ ) from unseen features ( X’ ). This process, known as linear regression, is widely used across various fields for making reliable predictions. Linear regression is a supervised learning algorithm known for its low variance and good generalization properties, even with small datasets. To prevent overfitting, regularization is used to modify the objective function, reducing the impact of outliers and irrelevant features.

The most common method for linear regression is "regularized least squares," which minimizes:

[ \| y - \beta X \|_2^2 + \lambda \| \beta \| ]

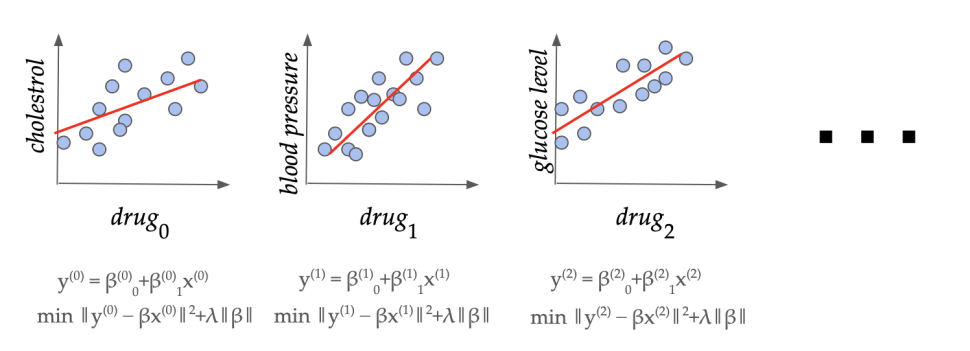

Here, the first term captures the error on the training set, and the second term is a norm-based penalty that discourages overfitting. Setting ( \lambda ) appropriately is crucial, and the right value depends on the data domain; how to choose it in a principled way is a longstanding open question in the field. With access to similar datasets from the same domain, such as multiple drug trial studies, we can learn a good domain-specific value of ( \lambda ) with strong theoretical guarantees of accuracy on unseen datasets.
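To make the objective concrete, here is a minimal sketch for one special case: the penalty is taken to be the squared L2 norm (ridge regression), which has a closed-form solution. The function name `ridge_fit`, the synthetic data, and the convention that rows of `X` are samples (so predictions are `X @ beta`) are assumptions made for illustration, not part of the original article.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Minimize ||y - X @ beta||_2^2 + lam * ||beta||_2^2 in closed form.

    Assumes rows of X are samples (shape m x p) and lam > 0.
    """
    p = X.shape[1]
    # Normal equations with a ridge term: (X^T X + lam * I) beta = X^T y
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

# Synthetic data, purely illustrative
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
beta_true = np.array([2.0, -1.0, 0.5])
y = X @ beta_true + 0.1 * rng.normal(size=50)

beta_small = ridge_fit(X, y, lam=1e-3)  # nearly ordinary least squares
beta_large = ridge_fit(X, y, lam=1e3)   # heavily shrunk toward zero
print(np.linalg.norm(beta_small), np.linalg.norm(beta_large))
```

A small `lam` nearly recovers the unregularized fit, while a large `lam` shrinks the model toward zero, which is the trade-off that makes the choice of ( \lambda ) matter.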

Problem Setup

Linear regression with norm-based regularization is a staple in statistics and machine learning courses, used for data analysis and feature selection. The regularization penalty typically involves weighted norms of the learned linear model ( \beta ), where the weights are selected by a domain expert. When the penalty combines an L1 term with weight ( \lambda_1 ) and an L2 term with weight ( \lambda_2 ), the method is known as the Elastic Net, which incorporates the advantages of both Ridge regression and LASSO.
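As an illustration of how the two penalties interact, the following sketch fits an Elastic Net by cyclic coordinate descent. It assumes the objective is ||y - X b||_2^2 + lam1 * ||b||_1 + lam2 * ||b||_2^2, with rows of `X` as samples and no all-zero columns; the names `elastic_net_cd` and `soft_threshold` are hypothetical, and this generic solver is not claimed to be the one used in the papers.

```python
import numpy as np

def soft_threshold(z, t):
    """Soft-thresholding operator: sign(z) * max(|z| - t, 0)."""
    return np.sign(z) * max(abs(z) - t, 0.0)

def elastic_net_cd(X, y, lam1, lam2, n_iter=200):
    """Cyclic coordinate descent for
    ||y - X b||_2^2 + lam1 * ||b||_1 + lam2 * ||b||_2^2."""
    m, p = X.shape
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0)  # x_j^T x_j for each column
    for _ in range(n_iter):
        for j in range(p):
            # Residual with coordinate j's own contribution added back
            r_j = y - X @ beta + X[:, j] * beta[j]
            rho = X[:, j] @ r_j
            # Exact minimizer over coordinate j with the others held fixed
            beta[j] = soft_threshold(rho, lam1 / 2.0) / (col_sq[j] + lam2)
    return beta

# Synthetic data with two relevant features out of five (illustrative only)
rng = np.random.default_rng(1)
X = rng.normal(size=(60, 5))
y = X @ np.array([3.0, 0.0, 0.0, -2.0, 0.0]) + 0.05 * rng.normal(size=60)
beta_en = elastic_net_cd(X, y, lam1=20.0, lam2=1.0)
print(np.round(beta_en, 3))
```

The L1 term drives the soft-thresholding step (which can set coefficients exactly to zero), while the L2 term enters only through the denominator and shrinks the surviving coefficients.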

Despite their importance, setting these coefficients in a principled way has been challenging. Common practice involves "grid search" cross-validation, which is computationally intensive and lacks theoretical guarantees on performance with unseen data. Our work addresses these limitations in a data-driven setting.
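For reference, the grid-search baseline can be sketched as follows. This is a minimal version assuming a ridge penalty and K-fold cross-validation on a single dataset; `grid_search_cv`, the grid values, and the synthetic data are illustrative choices rather than anything prescribed by the article.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge solution (rows of X are samples)."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

def grid_search_cv(X, y, lam_grid, n_folds=5, seed=0):
    """Return the grid value with the lowest mean K-fold validation MSE."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    mean_losses = []
    for lam in lam_grid:
        fold_losses = []
        for k in range(n_folds):
            val_idx = folds[k]
            train_idx = np.concatenate(
                [folds[i] for i in range(n_folds) if i != k])
            beta = ridge_fit(X[train_idx], y[train_idx], lam)
            err = y[val_idx] - X[val_idx] @ beta
            fold_losses.append(np.mean(err ** 2))
        mean_losses.append(np.mean(fold_losses))
    best = int(np.argmin(mean_losses))
    return lam_grid[best], mean_losses

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
y = X @ rng.normal(size=5) + 0.5 * rng.normal(size=100)
lam_grid = [0.01, 0.1, 1.0, 10.0, 100.0]
best_lam, losses = grid_search_cv(X, y, lam_grid)
print(best_lam)
```

The cost is one model fit per grid value per fold, and the answer can only be as good as the grid itself, which is the computational burden and lack of guarantees described above.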

The Importance of Regularization

Regularization coefficients ( \lambda_1 ) and ( \lambda_2 ) play a crucial role across fields. In machine learning, they provide generalization guarantees and prevent overfitting. In statistical data analysis, they yield parsimonious and interpretable models. In Bayesian statistics, they correspond to specific priors on ( \beta ). Setting these coefficients too high can cause underfitting, while setting them too low can leave the model prone to overfitting. This makes their selection crucial, especially in the Elastic Net, where trade-offs between sparsity, feature correlation, and bias must be managed.

The Data-Driven Algorithm Design Paradigm

In the age of big data, many fields record and store data for pattern analysis. For example, pharmaceutical companies conduct numerous drug trials. This motivates the data-driven algorithm design setting, introduced by Gupta and Roughgarden, which designs algorithms that work well on typical datasets from an application domain. We apply this paradigm to tune regularization parameters in linear regression.

The Learning Model

We model data from the same domain as a fixed distribution ( D ) over problem instances. Each instance consists of training and validation splits. The goal is to learn regularization coefficients ( \lambda ) that generalize well over unseen instances drawn from ( D ). We seek the value of ( \lambda ) that minimizes the expected loss on unseen test instances.
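The learning model can be sketched as empirical risk minimization over problem instances: pick the parameter with the lowest average validation loss on the instances seen so far, then reuse it on new instances. The sketch below makes several simplifying assumptions that are not in the article: a ridge penalty with a single parameter, a finite grid of candidate values, and a hypothetical `sample_instance` generator standing in for the unknown distribution ( D ).

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge solution (rows of X are samples)."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

def validation_loss(instance, lam):
    """Train on the instance's training split, score on its validation split."""
    X_tr, y_tr, X_val, y_val = instance
    beta = ridge_fit(X_tr, y_tr, lam)
    return np.mean((y_val - X_val @ beta) ** 2)

def average_loss(instances, lam):
    return np.mean([validation_loss(inst, lam) for inst in instances])

def learn_lambda(instances, lam_grid):
    """Grid value minimizing the average validation loss over the instances."""
    avg = [average_loss(instances, lam) for lam in lam_grid]
    return lam_grid[int(np.argmin(avg))]

def sample_instance(rng, m=20, p=10, noise=1.0):
    """Hypothetical stand-in for drawing one problem instance from D."""
    beta = 0.3 * rng.normal(size=p)
    X_tr, X_val = rng.normal(size=(m, p)), rng.normal(size=(m, p))
    y_tr = X_tr @ beta + noise * rng.normal(size=m)
    y_val = X_val @ beta + noise * rng.normal(size=m)
    return X_tr, y_tr, X_val, y_val

rng = np.random.default_rng(0)
train_instances = [sample_instance(rng) for _ in range(30)]
lam_grid = np.logspace(-2, 2, 9)
lam_hat = learn_lambda(train_instances, lam_grid)

# Evaluate the learned value on fresh instances from the same generator
fresh = [sample_instance(rng) for _ in range(30)]
print(lam_hat, average_loss(fresh, lam_hat))
```

The question studied in the article is how many training instances are needed before the loss of the learned value on fresh instances is provably close to the best achievable.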

How Much Data Do We Need?

The model ( \beta ) depends on both the dataset ((X, y)) and the regularization coefficients ( \lambda_1, \lambda_2 ). We analyze the "dual function," the loss expressed as a function of the parameters for a fixed problem instance. For Elastic Net regression, the dual is the validation loss on a fixed validation set for models trained with different values of ( \lambda_1, \lambda_2 ). We show that the dual loss function exhibits a piecewise structure, with polynomial boundaries and rational functions within each piece. This structure allows us to establish a bound on the learning-theoretic complexity of the dual function, specifically its pseudo-dimension.
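The piecewise structure is easiest to see with a single feature, where the Elastic Net solution has a closed form. The sketch below assumes the objective ||y - x b||_2^2 + lam1 * |b| + lam2 * b^2 and uses toy numbers chosen only for illustration. Under that assumption the fitted coefficient is soft_threshold(x.y, lam1 / 2) / (x.x + lam2), so the dual loss is constant once lam1 reaches 2|x.y| and is a rational function of (lam1, lam2) below that boundary.

```python
def elastic_net_1d(x, y, lam1, lam2):
    """Closed-form single-feature Elastic Net coefficient."""
    a = sum(xi * xi for xi in x)               # x . x
    c = sum(xi * yi for xi, yi in zip(x, y))   # x . y
    shrunk = max(abs(c) - lam1 / 2.0, 0.0)     # soft-thresholding
    sign = 1.0 if c >= 0 else -1.0
    return sign * shrunk / (a + lam2)

def dual_loss(train, val, lam1, lam2):
    """Validation MSE as a function of (lam1, lam2) for one fixed instance."""
    beta = elastic_net_1d(train[0], train[1], lam1, lam2)
    x_val, y_val = val
    return sum((yi - xi * beta) ** 2 for xi, yi in zip(x_val, y_val)) / len(y_val)

# One fixed toy instance: (features, targets) for train and validation
train = ([1.0, 2.0, 3.0], [1.1, 1.9, 3.2])
val = ([1.5, 2.5], [1.4, 2.6])

# Piece boundary in lam1: beyond it the coefficient is exactly zero
boundary = 2.0 * abs(sum(xi * yi for xi, yi in zip(*train)))
for lam1 in (0.0, 10.0, 20.0, 30.0, 40.0):
    print(lam1, round(dual_loss(train, val, lam1, lam2=1.0), 4))
```

With more features, the pieces correspond to different sets of nonzero coefficients, which is what leads to the polynomial boundaries and rational pieces described above.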

Theorem

The pseudo-dimension of the Elastic Net dual loss function is ( \Theta(p) ), where ( p ) is the feature dimension. This bound implies that ( O\left(\frac{1}{\epsilon^2}\left(p + \log \frac{1}{\delta}\right)\right) ) problem instances are sufficient to learn regularization parameters whose expected loss is within ( \epsilon ) of that of the best possible parameters, with probability at least ( 1 - \delta ).
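To get a feel for how the bound scales, the short calculation below evaluates (p + ln(1/delta)) / eps^2, where `eps` is the target accuracy and `delta` is the allowed failure probability. The big-O notation hides a constant that the article does not state, so the constant `C = 1` here is purely hypothetical and the outputs indicate scaling only, not actual sample sizes.

```python
import math

def instances_needed(p, eps, delta, C=1.0):
    """Evaluate C * (p + ln(1/delta)) / eps^2, rounded up.

    C is a hypothetical stand-in for the constant hidden by the O(.) notation.
    """
    return math.ceil(C * (p + math.log(1.0 / delta)) / eps ** 2)

print(instances_needed(p=10, eps=0.1, delta=0.05))   # 1300 when C = 1
print(instances_needed(p=10, eps=0.05, delta=0.05))  # halving eps roughly quadruples it
print(instances_needed(p=100, eps=0.1, delta=0.05))  # grows linearly in p
```

The dependence on the feature dimension is linear, while the dependence on the failure probability is only logarithmic.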

Sequential Data and Online Learning

In a more challenging variant, problem instances arrive sequentially, and parameters must be set using only previously seen instances. Assuming the prediction values are drawn from a distribution with bounded density, we show that the dual loss function’s piece boundaries do not concentrate in a small region of the parameter space. This implies the existence of an online learner with average expected regret ( O(1/\sqrt{T}) ) over ( T ) instances.
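A simplified picture of the online setting is the classical exponentially weighted forecaster run over a finite grid of candidate parameter values. This is a swapped-in textbook technique, not the learner analyzed in the papers, which works over a continuous parameter space. The sketch assumes losses scaled to [0, 1] and uses random synthetic losses in place of real validation losses.

```python
import numpy as np

def exponential_weights(loss_matrix, eta):
    """Exponentially weighted forecaster over a finite grid of parameters.

    loss_matrix[t, k] is the loss in [0, 1] of grid value k on instance t,
    revealed only after the learner commits to its distribution for round t.
    Returns the learner's expected loss in each round.
    """
    T, K = loss_matrix.shape
    log_w = np.zeros(K)
    expected = np.empty(T)
    for t in range(T):
        probs = np.exp(log_w - log_w.max())  # subtract max for stability
        probs /= probs.sum()
        expected[t] = probs @ loss_matrix[t]
        log_w -= eta * loss_matrix[t]        # down-weight values that did badly
    return expected

# Synthetic losses (illustrative): grid value 3 is better on average
rng = np.random.default_rng(0)
T, K = 500, 11
losses = rng.uniform(size=(T, K))
losses[:, 3] *= 0.5

eta = np.sqrt(8.0 * np.log(K) / T)
learner = exponential_weights(losses, eta)
regret = learner.sum() - losses.sum(axis=0).min()
print(regret / T)
```

With this step size, the standard analysis bounds the total regret against the best fixed grid value by sqrt(T ln(K) / 2), so the average regret decays at the same ( 1/\sqrt{T} ) rate.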

Extension to Binary Classification

For binary classification, where ( y ) values are 0 or 1, regularization is crucial to avoid overfitting. Logistic regression replaces the squared loss with the logistic loss function. We use a proxy dual function to approximate the true loss function, showing that the generalization error with ( N ) samples is bounded by ( O(\sqrt{(m^2 + \log 1/\epsilon)/N} + \epsilon) ) with high probability.
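For concreteness, here is a minimal sketch of regularized logistic regression trained by plain gradient descent. It assumes an L2 penalty, the objective "mean logistic loss + lam * ||beta||_2^2", and a fixed step size that is only suitable for small `lam`; these choices and the names `logistic_fit` and `sigmoid` are illustrative and are not taken from the papers.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def logistic_fit(X, y, lam, lr=0.1, n_iter=2000):
    """Gradient descent on mean logistic loss + lam * ||beta||_2^2.

    Labels y are assumed to be 0 or 1; rows of X are samples.
    """
    m, p = X.shape
    beta = np.zeros(p)
    for _ in range(n_iter):
        # Gradient of the mean logistic loss plus gradient of the L2 penalty
        grad = X.T @ (sigmoid(X @ beta) - y) / m + 2.0 * lam * beta
        beta -= lr * grad
    return beta

# Synthetic binary labels from a noisy linear rule (illustrative only)
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = (X @ np.array([2.0, -1.0]) + 0.5 * rng.normal(size=200) > 0).astype(float)

beta_weak = logistic_fit(X, y, lam=0.01)
beta_strong = logistic_fit(X, y, lam=1.0)
accuracy = np.mean((sigmoid(X @ beta_weak) > 0.5) == (y == 1))
print(np.linalg.norm(beta_weak), np.linalg.norm(beta_strong), accuracy)
```

Unlike the squared-loss case, there is no closed-form solution here, so the fitted model is harder to express exactly as a function of the regularization parameter.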

Beyond the Linear Case: Kernel Regression

For non-linear relationships, Kernelized Least Squares Regression maps the input ( X ) to a high-dimensional feature space using the "kernel trick." We show that the pseudo-dimension of the dual loss function in this case is ( O(m) ), where ( m ) is the size of the training set.
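A minimal sketch of kernelized least squares is shown below, assuming a Gaussian (RBF) kernel and an L2-type penalty so that the dual coefficients solve (K + lam * I) alpha = y. The kernel choice, the bandwidth `gamma`, and the function names are assumptions made for illustration.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    """K[i, j] = exp(-gamma * ||A[i] - B[j]||^2)."""
    sq = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def kernel_ridge_fit(X, y, lam, gamma):
    """Solve (K + lam * I) alpha = y for the dual coefficients alpha."""
    K = rbf_kernel(X, X, gamma)
    return np.linalg.solve(K + lam * np.eye(len(y)), y)

def kernel_ridge_predict(X_train, alpha, X_new, gamma):
    """Prediction is a kernel-weighted sum over the training points."""
    return rbf_kernel(X_new, X_train, gamma) @ alpha

# Non-linear toy target: noisy sine curve (illustrative only)
rng = np.random.default_rng(0)
X_train = np.linspace(-3.0, 3.0, 40)[:, None]
y_train = np.sin(X_train[:, 0]) + 0.1 * rng.normal(size=40)

alpha = kernel_ridge_fit(X_train, y_train, lam=0.1, gamma=0.5)
X_test = np.linspace(-2.5, 2.5, 50)[:, None]
pred = kernel_ridge_predict(X_train, alpha, X_test, gamma=0.5)
mse = np.mean((pred - np.sin(X_test[:, 0])) ** 2)
print(mse)
```

The model has one coefficient per training point rather than one per feature, which is consistent with the complexity bound scaling with the training set size ( m ).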

Final Remarks

We address tuning norm-based regularization parameters in linear regression, extending our results to online learning, linear classification, and kernel regression. Our research opens up the exciting question of tuning learnable parameters in continuous optimization problems, capturing scenarios with repeated data instances from the same domain.

For further details, see our papers listed below:

- Balcan, M.-F., Khodak, M., Sharma, D., & Talwalkar, A. (2022). Provably tuning the ElasticNet across instances. Advances in Neural Information Processing Systems, 35.

- Balcan, M.-F., Nguyen, A., & Sharma, D. (2023). New Bounds for Hyperparameter Tuning of Regression Problems Across Instances. Advances in Neural Information Processing Systems, 36.

—

This article was initially published on the ML@CMU blog and appears here with the authors’ permission.