By Yangzhen Wu, Zhiqing Sun, Shanda Li, Sean Welleck, Yiming Yang

Our CMU-MATH team recently secured 2nd place in the Artificial Intelligence Mathematical Olympiad (AIMO), competing against 1,161 teams and winning a prize of ![]() ! This competition aims to advance AI in solving mathematical problems, with the ultimate goal of creating an AI model capable of winning a gold medal in the International Mathematical Olympiad (IMO). Read on to learn about our winning strategy in this prestigious contest.

! This competition aims to advance AI in solving mathematical problems, with the ultimate goal of creating an AI model capable of winning a gold medal in the International Mathematical Olympiad (IMO). Read on to learn about our winning strategy in this prestigious contest.

Background: The AIMO Competition

The Artificial Intelligence Mathematical Olympiad (AIMO) Prize, initiated by XTX Markets, is a groundbreaking competition designed to push the boundaries of AI in mathematical problem-solving. The advisory committee includes Fields Medal winners Timothy Gowers and Terence Tao. Attracting world-class mathematicians and machine learning researchers, AIMO sets a new standard for excellence in the field.

AIMO has introduced a series of progress prizes, starting with a Kaggle competition featuring 50 hidden test problems. These problems are as challenging as the AMC12 and AIME exams for the USA IMO team pre-selection. The private leaderboard determined the final rankings, distributing ![]() from the one-million dollar prize pool among the top five teams. Each solution was allocated either a P100 GPU or 2xT4 GPUs, with up to 9 hours to solve the 50 problems.

from the one-million dollar prize pool among the top five teams. Each solution was allocated either a P100 GPU or 2xT4 GPUs, with up to 9 hours to solve the 50 problems.

To give an idea of the problem difficulty, AIMO provided a 10-problem training set open to the public. Here are two example problems:

- Each of the three-digit numbers

to

to  is colored blue or yellow such that the sum of any two yellow numbers equals a blue number. What is the maximum possible number of yellow numbers?

is colored blue or yellow such that the sum of any two yellow numbers equals a blue number. What is the maximum possible number of yellow numbers?

The first problem involves analytic geometry, requiring the model to understand geometric objects and perform symbolic computations. The second problem, in extremal combinatorics, demands creative thinking to exploit the problem’s structure. These problems are challenging even for humans, let alone language models.

Overall, AIMO problems were significantly more challenging than those in GSM8K, a standard mathematical reasoning benchmark for LLMs, and as difficult as the hardest problems in the MATH dataset. The limited computational resources—P100 and T4 GPUs, both over five years old—posed an additional challenge, making it crucial to employ appropriate models and strategies to maximize accuracy within the constraints.

Our Winning Formula

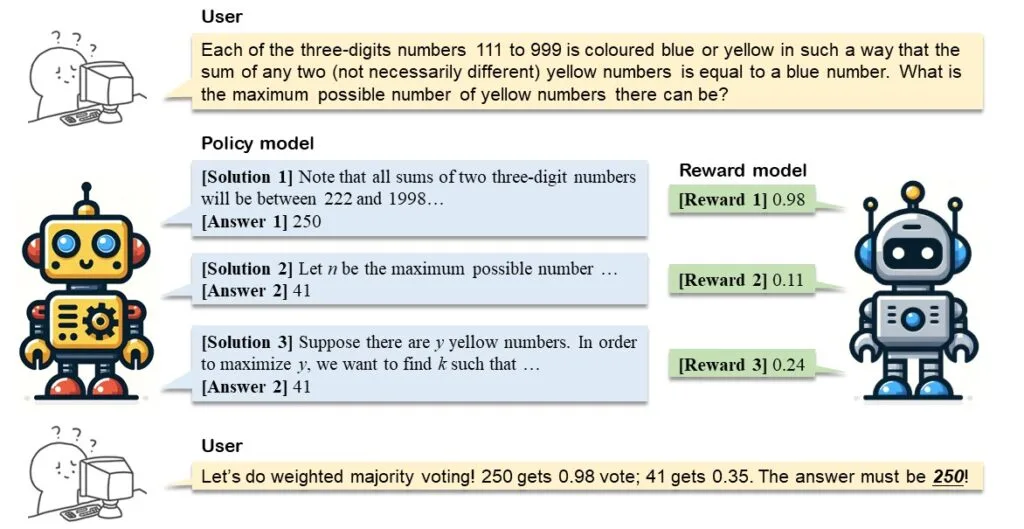

Unlike most teams that relied on a single model, we used a dual-model approach. Our final solutions were derived through a weighted majority voting system, generating multiple solutions with a policy model and assigning weights using a reward model. This strategy, based on our study on compute-optimal inference, consistently outperforms naive majority voting given the same inference budget.

Both models were fine-tuned from the DeepSeek-Math-7B-RL checkpoint. Below, we detail the fine-tuning process and inference strategies.

Policy Model: Program-Aided Problem Solver Based on Self-Refinement

The policy model served as the primary problem solver. We noted that LLMs can perform mathematical reasoning using both text and programs. Natural language excels in abstract reasoning but falls short in precise computation. Programs, however, are adept at rigorous operations. To harness both methods, we implemented the Program-Aided Language Models (PAL) or Tool-Augmented Reasoning (ToRA) approach. The model first generates rationales in text form, followed by a computer program to derive a numerical answer.

We needed a suitable problem set with « ground truth » solutions in ToRA format for supervised fine-tuning. We used a combination of AMC, AIME, and Odyssey-Math, resulting in a dataset of 2,600 problems. We prompted GPT-4o (and DeepSeek-Coder-V2) to generate 64 solutions for each problem, retaining those that led to correct answers. Our final dataset contained 41,160 problem-solution pairs. We performed supervised fine-tuning on the DeepSeek-Math-7B-RL model for 3 epochs with a learning rate of 2e-5.

During inference, we employed the self-refinement technique, providing feedback to the policy model on the execution results of the generated program and allowing the model to refine the solution accordingly.

Below is our ablation study on the techniques we employed for the policy model, using accuracy on a selected subset of the MATH test set as the evaluation metric.

| Model | Output Format | Inference Strategy | Accuracy |

|---|---|---|---|

| DeepSeek RL 7b | Text-only | Greedy decoding | 54.02% |

| DeepSeek RL 7b | ToRA | Greedy decoding | 58.05% |

| DeepSeek RL 7b | ToRA | Greedy + Self-refine | 60.73% |

| DeepSeek RL 7b | ToRA | Maj@16 + Self-refine | 70.46% |

| DeepSeek RL 7b | ToRA | Maj@64 + Self-refine | 72.48% |

| Our finetuned model | ToRA | Maj@16 + Self-refine | 74.50% |

| Our finetuned model | ToRA | Maj@64 + Self-refine | 76.51% |

Notably, the first-place team also used ToRA with self-refinement but curated a much larger problem set of 60,000 problems using GPT-4 to generate solutions. Their dataset was more than 20x larger than ours. The cost to generate solutions was far beyond our budget as an academic team (over ![]() based on our estimation). Our problem set was based purely on publicly available data, and we spent only ~

based on our estimation). Our problem set was based purely on publicly available data, and we spent only ~![]() for solution generation.

for solution generation.

Reward Model: Solution Scorer Using Label-Balance Training

While the policy model was a creative problem solver, it could sometimes produce incorrect solutions. On the publicly available 10-problem training set, our policy model only correctly solved two problems using standard majority voting with 32 sampled solutions. For another 2 problems, the model generated correct solutions that failed to be selected due to wrong answers dominating in majority voting.

This observation highlighted the potential of the reward model. The reward model was a solution scorer that took the policy model’s output and generated a score between 0 and 1. Ideally, it assigned high scores to correct solutions and low scores to incorrect ones, aiding in the selection of correct answers during weighted majority voting.

The reward model was fine-tuned from a DeepSeek-Math-7B-RL model on a labeled dataset containing both correct and incorrect problem-solution pairs. We utilized the same problem set as for the policy model training and expanded it by incorporating problems from the MATH dataset with integer answers. Simple as it may sound, generating high-quality data and training a strong reward model was non-trivial. We considered the following two essential factors for the reward model training set:

- Label balance: The dataset should contain both correct (positive examples) and incorrect solutions (negative examples) for each problem, with a balanced number of correct and incorrect solutions.

- Diversity: The dataset should include diverse solutions for each problem, encompassing different correct approaches and various failure modes.

Sampling solutions from a single model cannot meet those factors. For example, while our fine-tuned policy model achieved very high accuracy on the problem set, it was unable to generate any incorrect solutions and lacked diversity amongst correct solutions. Conversely, sampling from a weaker model, such as DeepSeek-Math-7B-Base, rarely yielded correct solutions. To create a diverse set of models with varying capabilities, we employed two novel strategies:

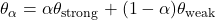

- Interpolate between strong and weak models. For MATH problems, we interpolated the model parameters of a strong model (DeepSeek-Math-7B-RL) and a weak model (DeepSeek-Math-7B-Base) to get models with different levels of capabilities. Denote by

and

and  the model parameters of the strong and weak model. We considered interpolated models with parameters

the model parameters of the strong and weak model. We considered interpolated models with parameters  and set

and set  , obtaining 8 models. Those models exhibited different problem solving accuracies on MATH. We sampled two solutions from each model for each problem, yielding diverse outputs with balanced correct and incorrect solutions. This technique was motivated by the research on model parameter merging (e.g., model soups) and represented an interesting application of this idea, i.e., generating models with different levels of capabilities.

, obtaining 8 models. Those models exhibited different problem solving accuracies on MATH. We sampled two solutions from each model for each problem, yielding diverse outputs with balanced correct and incorrect solutions. This technique was motivated by the research on model parameter merging (e.g., model soups) and represented an interesting application of this idea, i.e., generating models with different levels of capabilities. - Leverage intermediate checkpoints. For the AMC, AIME, and Odyssey, recall that our policy model had been fine-tuned on those problems for 3 epochs. The final model and its intermediate checkpoints naturally provided us with multiple models exhibiting different levels of accuracy on these problems. We leveraged these intermediate checkpoints, sampling 12 solutions from each model trained for 0, 1, 2, and 3 epochs.

These strategies allowed us to obtain a diverse set of models almost for free, sampling varied correct and incorrect solutions. We further filtered the generated data by removing wrong solutions with non-integer answers since it was trivial to determine that those answers are incorrect during inference. In addition, for each problem, we maintained equal numbers of correct and incorrect solutions to ensure label balance and avoid a biased reward model. The final dataset contains 7000 unique problems and 37880 labeled problem-solution pairs. We finetuned DeepSeek-Math-7B-RL model for 2 epochs with learning rate 2e-5 on the curated dataset.

Source

Source